6 Null Hypothesis Significant Testing

Null hypothesis significance testing (NHST) is a controversial approach to testing hypotheses. It is a commonly-used approach in psychological science.

Simply, NHST begins with an assumption, a hypothesis, about some effect in a population. Data is collected that is believed to be representative of that population (see previous chapter on Samples and Populations). Should the data not align with the null hypothesis, it is taken as evidence against it.

There are several concepts related to NHST and its misconceptions that need to be addressed to facilitate your understanding and research.

6.1 p-values

Prior to exploring p-values, let’s ensure you have a basic understanding of probability notation. I recommend you review the chapter on probability first. Back to the notation…:

For example, the probability of flipping a coin and getting a heads,

Additionally, I could use the notation for a conditional probability:

For example, what if I provided more details in the previous coin-flipping example? The coin is not a fair coin. Well, the original probability will only be our best guess if the coin is fair. That is, p(heads|fair coin) = .5. However, with the new information, p(heads|unfair coin)

The reverse of a conditional probability is not always equal to the original conditional probability. That is,

Do you think this is equal to the probability that someone is the Prime Minister of Canada, given that they are Canadian: p(Prime Minister|Canadian)? I would argue that the former is

- p(Canadian|Prime Minister) = 1.00

- p(Prime Minister|Canadian)=.0000000263

It is imperative understand conditional probability and that the reverse conditional probability likely differs from the initial.

A key feature of NHST is that the null hypothesis is assumed to be true. Given this assumption, we can estimate how likely a set of data are. This is what a p-value tells you.

The p-value is the probability of obtaining data as or more extreme than you did, given a true null hypothesis. We can use our notation:

Where D is our data (or more extreme) and

When the p-value meets some predetermined threshold, it is often referred to as statistical significance. This threshold has typically, and arbitrarily been

…beginning with the assumption that the true effect is zero (i.e., the null hypothesis is true), a p-value indicates the proportion of test statistics, computed from hypothetical random samples, that are as extreme, or more extreme, then the test statistic observed in the current study.

or, stated another way:

The smaller the p-value, the greater the statistical incompatibility of the data with the null hypothesis, if the underlying assumptions used to calculate the p-value hold. This incompatibility can be interpreted as casting doubt on or providing evidence against the null hypothesis or the underlying assumptions.

Simply, a p-values indicates the probability of your, or more extreme, test statistic, assuming the null:

| Reality | |||

|---|---|---|---|

| No Effect ( |

Effect ( |

||

| Research Result | No Effect ( |

Correctly Fail to Reject |

Type II Error ( |

| Effect ( |

Type I Error ( |

Correctly Reject |

When we conduct NHST, we are assuming that the null hypothesis is indeed true, despite never truly knowing this. As noted, a p-value indicates the likelihood of your or more extreme data given the null.

6.1.1 If the null hypothesis is true, why would our data be extreme?

In inferential statistics we make inferences about population-level parameters based on sample-level statistics. For example, we infer that a sample mean is indicative of and our best estimate of a population mean.

In, NHST, we assume that population-level effects or associations (i.e., correlations, mean differences, etc.) are zero, null, nothing at a population level (note: this is not always the case). If we sampled from a population whose true effect or association was

A p-value is a probability value. It is an italicized p. Specifically, the probability of obtaining your or more extreme data given a true null hypothesis.

While

If we took 10,000 samples of size

The above shows the distribution of 10,000 correlation coefficients derived from simulated samples from a population that has

While we used simulated data from a hypothesized population, math people have derived formulas that represent the approximate shape of the curve for infinite samples. We don’t need to know the calculus behind it, but the curve for a null population correlation (

In the above, the extreme 5% of the curve is associated with a correlation of

| Participant | x | y |

|---|---|---|

| 1 | -1.4759696 | -0.9109435 |

| 2 | 0.5658273 | -1.8701988 |

| 3 | 0.1805740 | 0.2642193 |

| 4 | -0.6255420 | 1.9641472 |

| 5 | -0.4936539 | -0.6887534 |

| 6 | 1.6158737 | 1.4952663 |

| 7 | -0.7168520 | -0.4804542 |

| 8 | 0.4519491 | 0.4608354 |

| 9 | -0.2398118 | 0.1772640 |

| 10 | 0.8316019 | 0.4042273 |

| 11 | -0.6183224 | -0.7441028 |

| 12 | -0.5682177 | -0.3891823 |

| 13 | 0.7711289 | 0.5722868 |

| 14 | -2.6018589 | -1.3421923 |

| 15 | -0.5064533 | -1.0413429 |

| 16 | -0.0043281 | 1.5822809 |

| 17 | 1.3737851 | 0.6665896 |

| 18 | 0.9755060 | -0.6206455 |

| 19 | 0.5090296 | 0.1514108 |

| 20 | 0.5757341 | 0.3492881 |

The following are the results of a correlation test on this data.

| Correlation Coefficient | p-value |

|---|---|

| 0.444 | 0.05 |

These results suggest the correlation in the sample is

The p-value indicates that given a true null hypothesis, we would expect a correlation coefficient as big or bigger than ours only 5% of the time.

In the distribution of sample test statistics above, this aligns with the fact that 5% of the statistics (2.5% in each tail) were at least

Because the probability of this data is quite low (i.e., we obtained an extreme statistic), given

6.1.2 Why is

You have often looked for “

The yellow region represents the extreme 1% of the distribution (0.5% per tail). The red region is where the original regions were for

So, we set the criterion of

In short, p-values are the probability of getting a set of data or more extreme given the null. We compare this to a criterion cut-off,

. If our data is very improbable given the null, so much that is is less than our proposed cutoff, we say it is statistically significant.

6.1.3 Misconceptions

Many of these misconceptions have been described in detail elsewhere (e.g., Nickerson, 2000). I visit only some of them.

6.1.3.1 1. Odds against chance fallacy

The odds against chance fallacy suggests that a p-value indicates the probability that the null hypothesis is true. For example, someone might conclude that if their

Jacob Cohen outlines a nice example wherein he compares

If you want

6.1.3.2 2. Odds the alternative is true

In NHST, no likelihoods are attributed to hypotheses. Instead, all p-values are predicated on

6.1.3.3 3. Small p-values indicate large effects

This is not the case. p-values depend on other things, such as sample size, that can lead to statistical significance for minuscule effect sizes. For example, consider the results of the following two correlations:

Result from test 1:

| r | p | Method |

|---|---|---|

| 0.1 | 0.0015441 | Pearson's product-moment correlation |

Result from test 2:

| r | p | Method |

|---|---|---|

| 0.1 | 0.3222174 | Pearson's product-moment correlation |

In the above, the two tests have the exact same effect size and correlation statistics,

As a result, it is important to not interpret p-values alone. Effect sizes and confidence intervals are much more informative.

6.2 Power

Whereas p-values rest on the assumption that the null hypothesis is true, the contrary assumption, that the null hypothesis is false (i.e., an effect exists), is important for determining statistical power. Statistical power is defined as the probability of correctly rejecting the null hypothesis given an true population effect size and sample size, or more formally:

Statistical power is the probability that a study will find p <

IF an effect of a stated size exists. It’s the probability of rejecting when is true. (Cumming & Calin-Jageman, 2016)

See the following figure for a depiction, where alpha=

This plot illustrates the concept of statistical power by comparing the null and alternative distributions. The red curve represents the null hypothesis (

In contrast, the dark blue shaded area under the alternative distribution to the left of the critical value represents the Type II error rate (

We can conclude from this definition that if your statistical power is low, you will not likely reject

Before proceeding to the next example, please note that you will often see the symbol ‘rho’, which is represented by the Greek symbol

Consider a researcher who is interested in the association substance use (SU) and suicidal behaviors (SB) in Canadian high school students. They design a study and use the NHST framework. Under this framework, they set the following hypotheses:

Let’s assume that the true correlation between SU and SB is

Every faint gray dot represents a student. Their score on SU is on the y-axis, while their score on SB is on the x-axis. We can see a trend where students with higher SU also have higher, on average, SB. So, it appears that as substance use increases, so do suicidal behaviors. Although we aren’t so omniscient in the real world, the population correlation here is

However, before conducting the study, the researcher conducts a power analysis to determine an appropriate sample size required to adequately power their study. They want to have a good probability of rejecting the null, if it were false (here, we know this is the case). To calculate the appropriate sample size, they will require: 1) pwr package to conduct their power analysis. This package is very useful; you can insert any three of the required four pieces of information (alpha, power, estimated sample size, and sample size) to calculate the missing piece. More details on using pwr are below. For our analysis, we get:

The results of this suggest that we need a sample of about 85 people (rounding up from

What does this mean? It means that if the true population correlation was

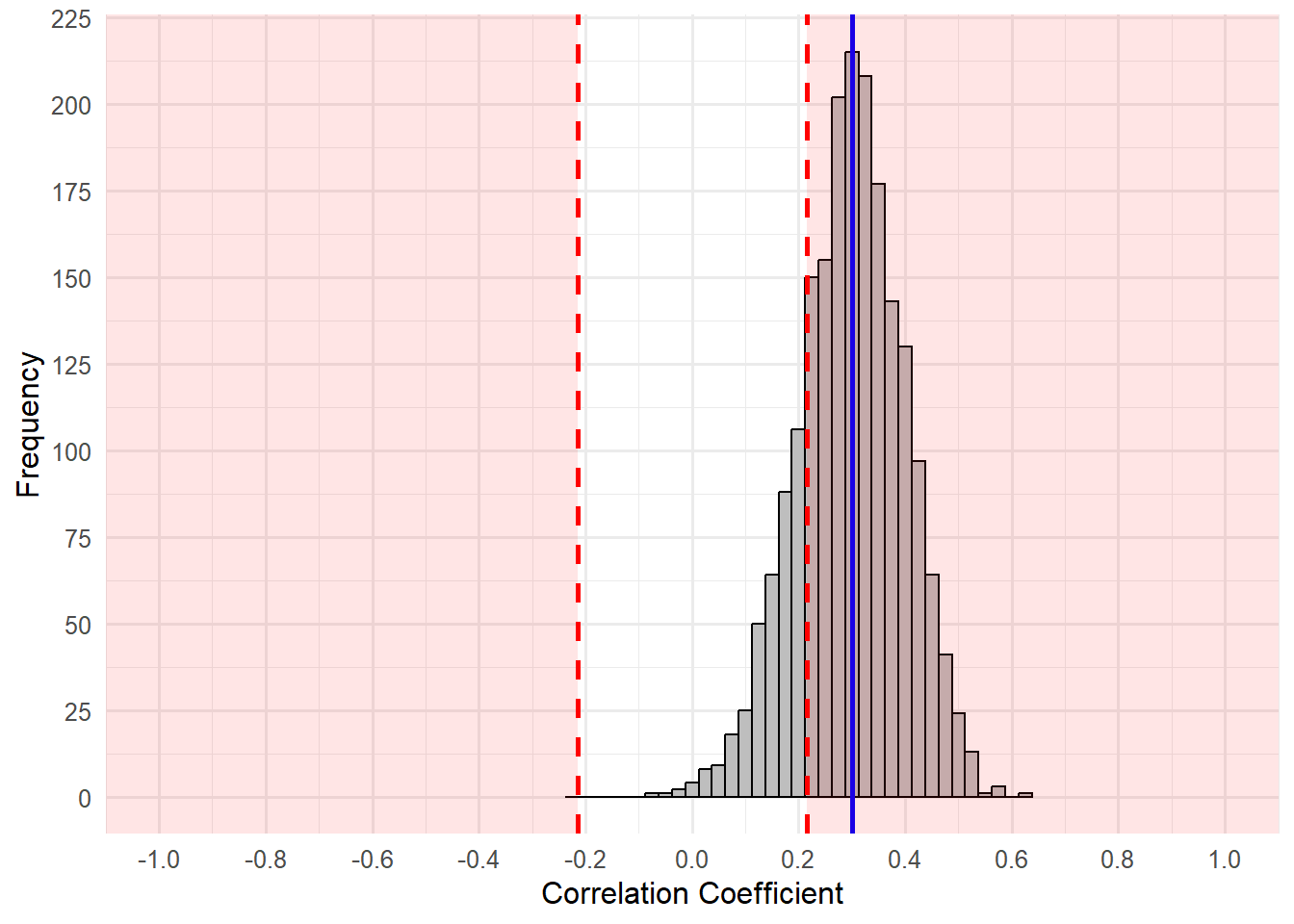

We can create a histogram that plots the results of many random studies (

This graph represents the distribution of correlation coefficients for each of our random samples, which were drawn from our population. Notice how they form a seemingly normal distribution around our true population correlation coefficient,

The red lines and shaded regions represent correlation coefficients that are beyond the

The results of our correlations suggest that 1609 correlation coefficients were at or beyond the critical value. Do you have any guess what proportion of the total samples that was? Recall that power is the probability that any study will have

6.2.1 What if we couldn’t recruit 85 participants?

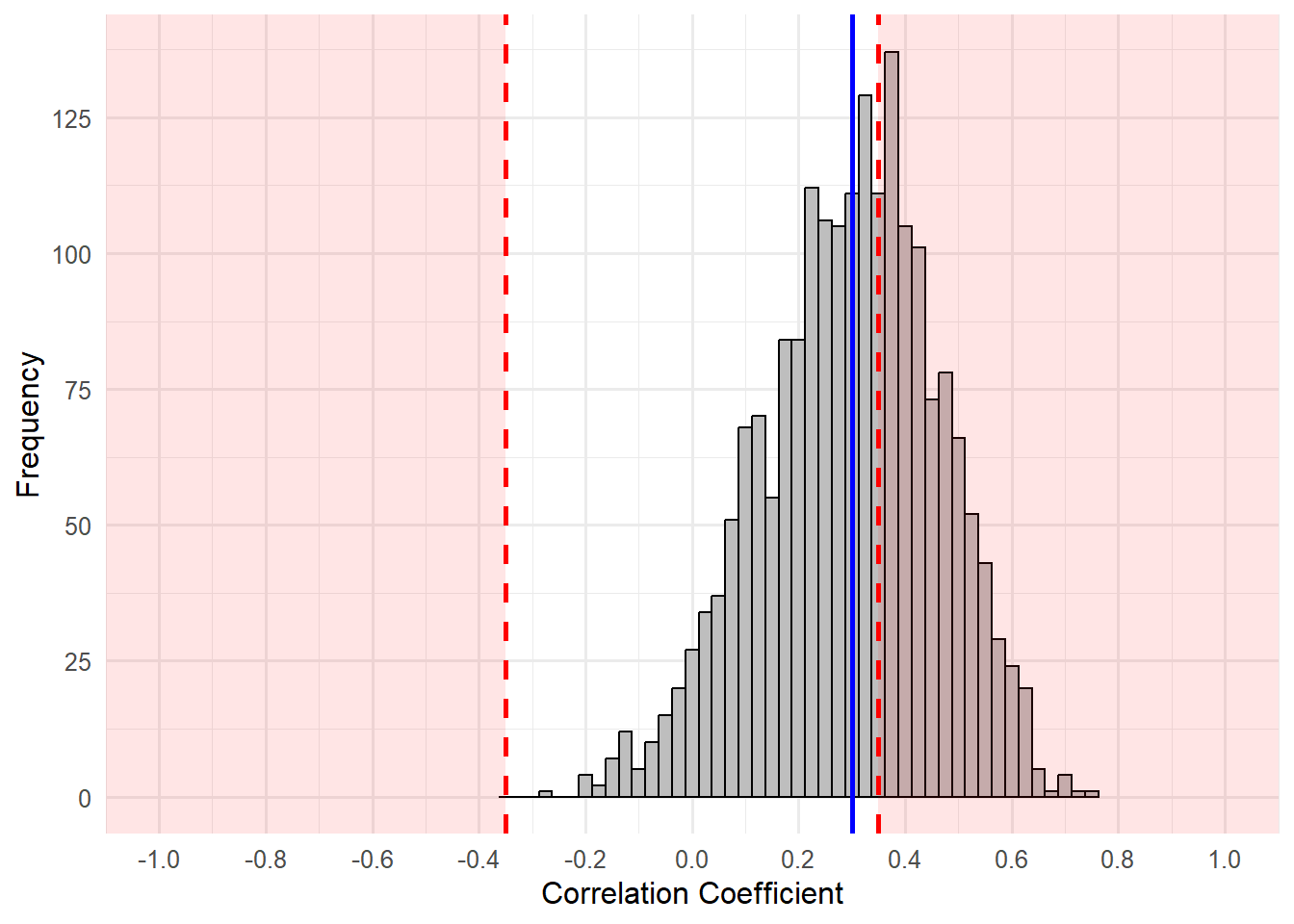

Perhaps we sampled from one high school in a small Canadian city and could only recruit 32 participants. How do you think this would affect our power? First, smaller sample sizes give less precise estimations of population parameters under the alternative distribution (i.e., the histogram above of distribution of sample statistics may be more spread out).

Second, this also influences our critical region for the correlation coefficient, so the red regions shift outward. That is, a lower sample size also increase variability in the distribution of sample statistics for the null distribution, as well. This means that the extreme 5% will be further out. Overall, both of these reduce power.

Let’s rerun our simulation with 2,000 random samples of

Hopefully, you can see that despite the distribution still centering around the population correlation of

Our results suggest that of the 2000 simulated studies, 792 studies yielded statistically significant results (

Formal Power Analysis

Whoa! Power was

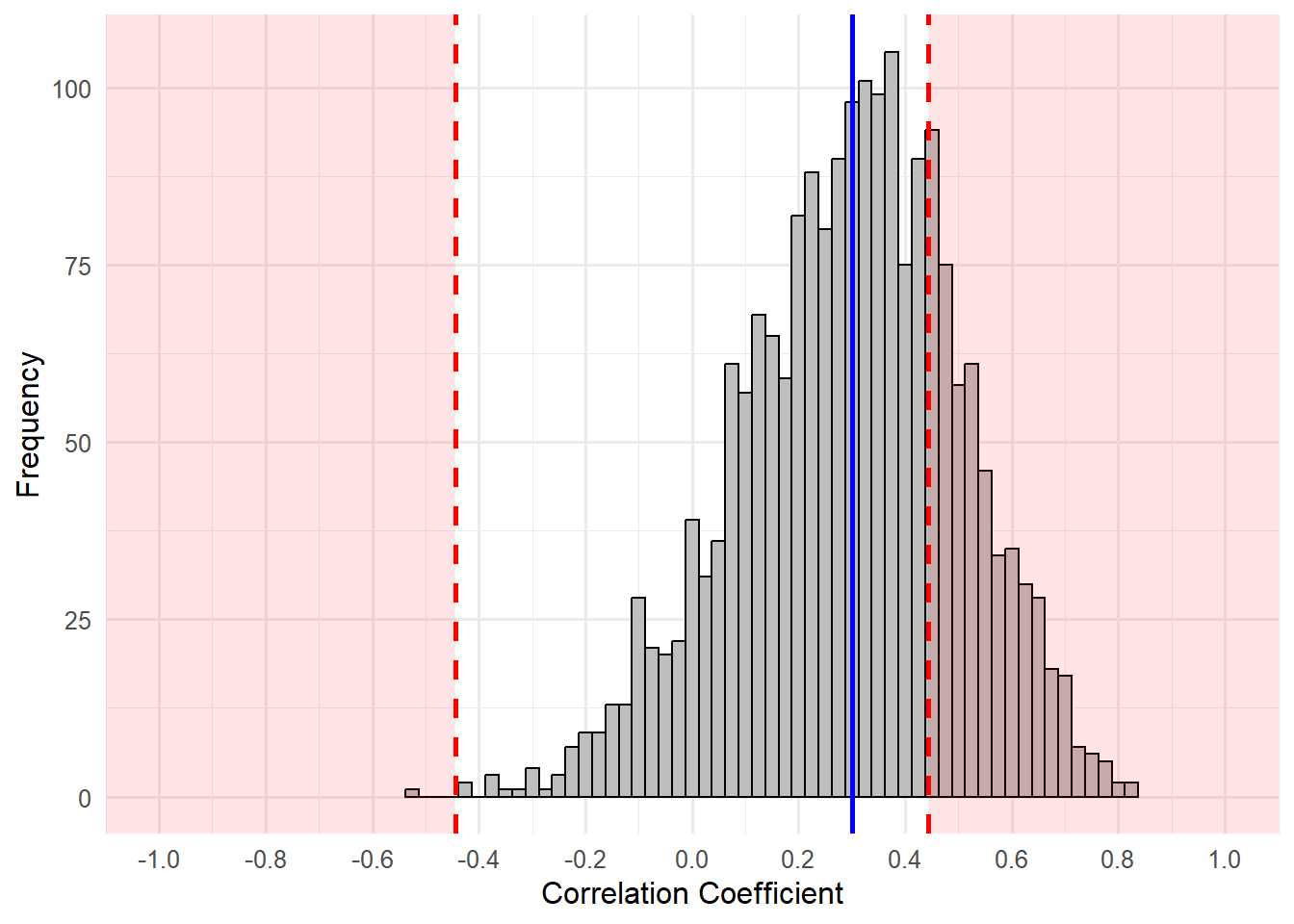

Let’s take it one step further and assume we could only get 20 participants. This is the plot of the resultant correlation coefficients. Note that the critical correlation coefficient value for

Hopefully, you can see that despite the distribution still centering around our population correlation of .3, the red regions are further outward than the previous two examples. Again, let’s see how many studies resulted in

A total of 496 studies yielded statistically significant results (

So, out of 2000 random samples from our population, 25.5% had

In sum, as sample size decreases and all other things are held constant, our power decreases. If your sample is too small, you will be unlikely to reject

6.3 Increasing Power

Power is the function of three components: sample size, hypothesized effect,

- When the hypothesized effect is larger;

- When you can collect more data (increase

- or by increasing your alpha level (i.e., making it less strict).

This relationship can be seen in the following graphs:

Compare the various rhos within a graph.

Also compare the rhos betwen a graph.

6.3.1 Effect Size

As the true population effect reduces in magnitude, your power is also reduced, given constant

If the population correlation was

6.3.1.1 Ways to Estimate Population Effect Size

There are many ways to estimate the population effect size. Here are some common examples, ordered by recommendation:

- Existing Meta-Analysis Results

Meta-analyses compile and combine results from multiple studies to give a more accurate estimate of the population effect size. By aggregating data across various studies, meta-analyses reduce bias and provide a more stable estimate.- Recommendation: Use the lower-bound estimate of the confidence interval (CI) from the meta-analysis. This is a more conservative approach that accounts for uncertainty and publication bias, which helps avoid overestimating the true effect size.

- Why it’s recommended: Meta-analyses offer a robust estimate by synthesizing evidence from multiple studies. Using the lower bound ensures a conservative sample size calculation, reducing the risk of underpowering your study.

- Recommendation: Use the lower-bound estimate of the confidence interval (CI) from the meta-analysis. This is a more conservative approach that accounts for uncertainty and publication bias, which helps avoid overestimating the true effect size.

- Existing Studies with Parameter Estimates

When no meta-analysis is available, individual studies reporting effect size estimates can be used. These should come from studies with similar designs, populations, or theories.- Recommendation: Use the lower-bound estimate of the confidence interval presented in the study or halve the reported effect size from a single study to ensure a more conservative estimate.

- Why it’s recommended: Single studies are more prone to sampling variability and biases like publication bias. By halving the effect size or using the lower bound, you reduce the risk of overestimating the true population effect size.

- Recommendation: Use the lower-bound estimate of the confidence interval presented in the study or halve the reported effect size from a single study to ensure a more conservative estimate.

- Smallest Meaningful Effect Size Based on Theory

Theoretical frameworks can suggest the smallest effect size that would be considered meaningful or practically important in your study. This might come from expert consensus or previous theoretical work that identifies thresholds for meaningful differences.- Recommendation: Choose the smallest effect size that is theoretically or practically significant. This ensures your study has enough power to detect meaningful effects, even if they are small.

- Why it’s recommended: Aligning effect size with theoretical relevance ensures your study detects effects that are practically or theoretically important rather than insignificant.

- Recommendation: Choose the smallest effect size that is theoretically or practically significant. This ensures your study has enough power to detect meaningful effects, even if they are small.

- General Effect Size Determinations (Small, Medium, Large)

If no specific guidance is available, general benchmarks for effect sizes can be used:- Small: Cohen’s d = 0.2, Pearson’s r = 0.1

- Medium: Cohen’s d = 0.5, Pearson’s r = 0.3

- Large: Cohen’s d = 0.8, Pearson’s r = 0.5

- Recommendation: Choose the general effect size that aligns best with your theory. If a strong relationship is expected, use a larger effect size; if subtle, use a smaller one.

- Why it’s recommended: These general benchmarks provide a starting point when no other data is available. They help design studies with realistic expectations for effect sizes, even without prior research.

- Small: Cohen’s d = 0.2, Pearson’s r = 0.1

6.3.2

Recall that the alpha level (commonly set at 0.05) represents the threshold we choose to determine statistical significance. Specifically, it is the probability of committing a Type I error — rejecting the null hypothesis when it is actually true. In other words, it defines the “extreme” region of our null distribution, where we would reject the null hypothesis in favor of the alternative hypothesis.

6.3.2.1 Reducing Alpha and Its Consequences

When we reduce our alpha level, we are essentially making our criterion for rejecting the null hypothesis more strict. This means that fewer observed outcomes will fall into the “extreme” region, which is now smaller. Visually, this reduction in alpha shrinks the size of the red areas in a power curve (those areas representing where we reject the null hypothesis under the alternative distribution). These areas are associated with detecting true effects, so shrinking them decreases the likelihood of finding a significant result when an effect is present.

Increased Threshold for “Extreme” Results: Lowering alpha increases the critical value (e.g., a larger correlation coefficient or test statistic would be needed to reject the null hypothesis). In the case of correlation tests, this results in a larger correlation coefficient threshold that we consider “extreme” enough to indicate significance. Essentially, we are raising the bar for what qualifies as a significant result.

Decreased Statistical Power: Holding all other factors constant, reducing the alpha level will decrease the power of the test. Power refers to the probability of correctly rejecting the null hypothesis when it is false (i.e., detecting a true effect). As the threshold for significance becomes stricter, it becomes harder to detect effects because the criterion for rejection is less lenient. Consequently, more true effects may go undetected, increasing the risk of committing a Type II error (failing to reject a false null hypothesis).

6.3.2.2 Trade-offs in Reducing Alpha

Reducing alpha is often done to minimize the risk of a Type I error, but this comes with trade-offs. While a stricter alpha reduces the probability of falsely rejecting the null hypothesis, it also reduces the power of your test, making it harder to detect true effects. Therefore, when designing a study, it’s important to carefully balance the chosen alpha level with the desired power, especially when small effects are being studied.

For instance: - If alpha is set too low (e.g., .01 instead of the conventional .05), the critical value becomes much larger, and your study may require a larger sample size to maintain sufficient power. - If alpha is too lenient (e.g., .10), the power may increase, but this comes at the expense of a higher likelihood of committing a Type I error, which could undermine the validity of your findings.

Thus, reducing alpha lowers the probability of Type I errors but also decreases the test’s power, making it more difficult to detect real effects. This trade-off must be carefully considered during the study design phase, particularly in studies where detecting small but meaningful effects is crucial.

6.4 Afterward

This section will focus on conducting power analysis across various statistical platforms.

6.4.1 Power in R

We will focus on two packages for conducting power analysis: pwr and pwr2.

6.4.1.1 Correlation

Correlation power analysis has four pieces of information. You need any three to calculate the other:

nis the sample sizeris the population effect,sig.levelis you alpha levelpoweris power

So, if we wanted to know the required sample size to achieve a power of .8, with a alpha of .05 and hypothesized population correlation of .25:

6.4.1.2 t-test

With same sized groups we use pwr.t.test. We now need to specify the type as one of ‘two.sample’, ‘one.sample’, or ‘paired’ (repeated measures). You can also specify the alternative hypothesis as ‘two.sided’, ‘less’, or ‘greater’. The function defaults to a two sampled t-test with a two-sided alternative hypothesis. It uses Cohen’s d population effect size estimate (in the following example I estimate population effect to be

6.4.1.3 One way ANOVA

One way requires Cohen’s F effect size, which is kind of like the average Cohen’s d across all conditions. Because it is more common for researchers to use

Cohen’s F

pwr.anova.test() requires the following:

k= number of groupsf= Cohen’s Fsig.levelis alpha, defaults to .05poweris your desired power

6.4.2 Alternatives for Power Calculation

6.4.2.1 G*Power

You can download here.

6.4.2.2 Simulations

Simulations can be run for typical designs, which you have seen above through our own simulations to demonstrate the general idea of power. For example, we can repeatedly run a t-test on two groups with a specific effect size at the population level. Knowing that Cohen’s d is:

We can use rnorm() to specify two groups where the difference in means is equal to Cohen’s d and when we keep the SD of both groups to 1.

We can use various R capabilities to simulate 10,000 simulations and determine the proportion of studies that conclude that

This returned a data.frame will 10,000 pvalues from simulations. The results suggest that 2360 samples were statistically significant, indicating 23.6% were statistically significant.

You may be thinking, why do this when I have pwr.t.test? Well, the rationale for more complex designs is the same. For more complicated designs, it can be difficult to determine the best power calculation to use (e.g., imagine a 4x4x4x3 ANOVA or a SEM). Sometimes it makes sense to run a simulation.

Simulation of SEM in R, which can help with power analysis.

Companion shiny app regarding statistical power can be found here.

6.5 Recommended Readings:

- Cohen, J. (1994). The Earth is round (p < .05).American Psychologist, 49, 997-1003.

- Nickerson, R. S. (2000). Null hypothesis significance testing: A review of an old and continuing controversy. Psychological Methods, 5(2), 241–301. https://doi.org/10.1037/1082-989x.5.2.241

- Pritchard, T. R. (2021). Visualizing Power.