| Person | Age |

|---|---|

| 1 | 20 |

| 2 | 19 |

| 3 | 23 |

| 4 | 22 |

| 5 | 19 |

3 Descriptive and Inferential Statistics

3.1 Descriptive Statistics

Descriptive statistics involve the use of numerical and graphical methods to summarize and present data in a meaningful way. Descriptive statistics focus on describing and summarizing the main features of a dataset. The primary goal is to simplify large amounts of data. This can help researchers, clinicians, and public understand it.

Key aspects of descriptive statistics include measures of central tendency and variability. We will also briefly visit figures/graphical representations of data.

3.1.1 Measures of Central Tendency

Imagine we measure five university students’ ages and get the following data:

3.1.1.1 Mean (Average)

The mean is one way we can understand the ‘average’ score of participants. That is, the value that best shows around where partcipants fall. The mean is the sum of all values divided by the number of observations:

For our hypothetical data above:

There are several benefits of using the mean. First, the mean takes into account every data point in the dataset, making it sensitive to changes in any value. Second, the mean has convenient mathematical properties, making it suitable for various statistical analyses.

The major downside to using the mean is that it is highly sensitive to extreme values (outliers). Having a few outliers can drastically skew the result. Consider adding one extreme value to our hypothetical data: a person of age 68. Our new mean would be:

You can see how drastic the change is (20.6 compared to 28.5). Indeed, the mean is higher than all but one of our participants’ ages. As another example, consider people’s salaries. There are 10 salaries close to the Canadian average, and one outlier.

| Salary |

|---|

| 75010 |

| 33673 |

| 40710 |

| 27427 |

| 57689 |

| 50474 |

| 54748 |

| 91066 |

| 46828 |

| 38751 |

| 525000 |

Calculate the mean with and without the outlier.

Mean with: $94,670.55

Mean without: $51,637.60

As you can see, the mean with is WAY higher than the mean without with one outlier. Imagine the answer to the question, ‘what is the average Canadian salary?’. How might you answer?

3.1.1.2 Median

The median is the middle value in a dataset when the data are ordered from least to greatest. It represents the 50th percentile of the data, meaning half of the values fall below it and half above. If the dataset has an even number of observations, the median is calculated by taking the mean of the two middle values (the same way we would calculate mean from above).

One advantage of the median is that it is not influenced by extreme values, making it a robust measure of central tendency, especially for skewed distributions or those with outliers.

A drawback is that it only considers the order of values, ignoring the actual numerical differences between them, which means it doesn’t capture information about the magnitude of changes in the dataset.

When calculating the median by hand, it’s helpful to first order the values from smallest to largest. For example, in the ordered set

As an example with even number of observations:

3.1.1.3 Mode

The mode is the most frequently occurring value in a dataset. It is particularly useful for nominal data, where there is no inherent order to the values, and it is also easy to understand and calculate.

However, one limitation is that a dataset may have no mode. If each values occurs the same amount of time (e.g., each value only occurs once), one best determine an alternative form of central tendancy (median or mean). It is also possible to have multiple modes, in which case the distribution is described as multimodal. Additionally, the mode may not provide an accurate representation of the center of a distribution, especially in datasets with a continuous or particularly skewed range of values.

For example, in the dataset provided, the mode is 45, meaning it occurs more frequently than any other value.

3.1.2 Variability

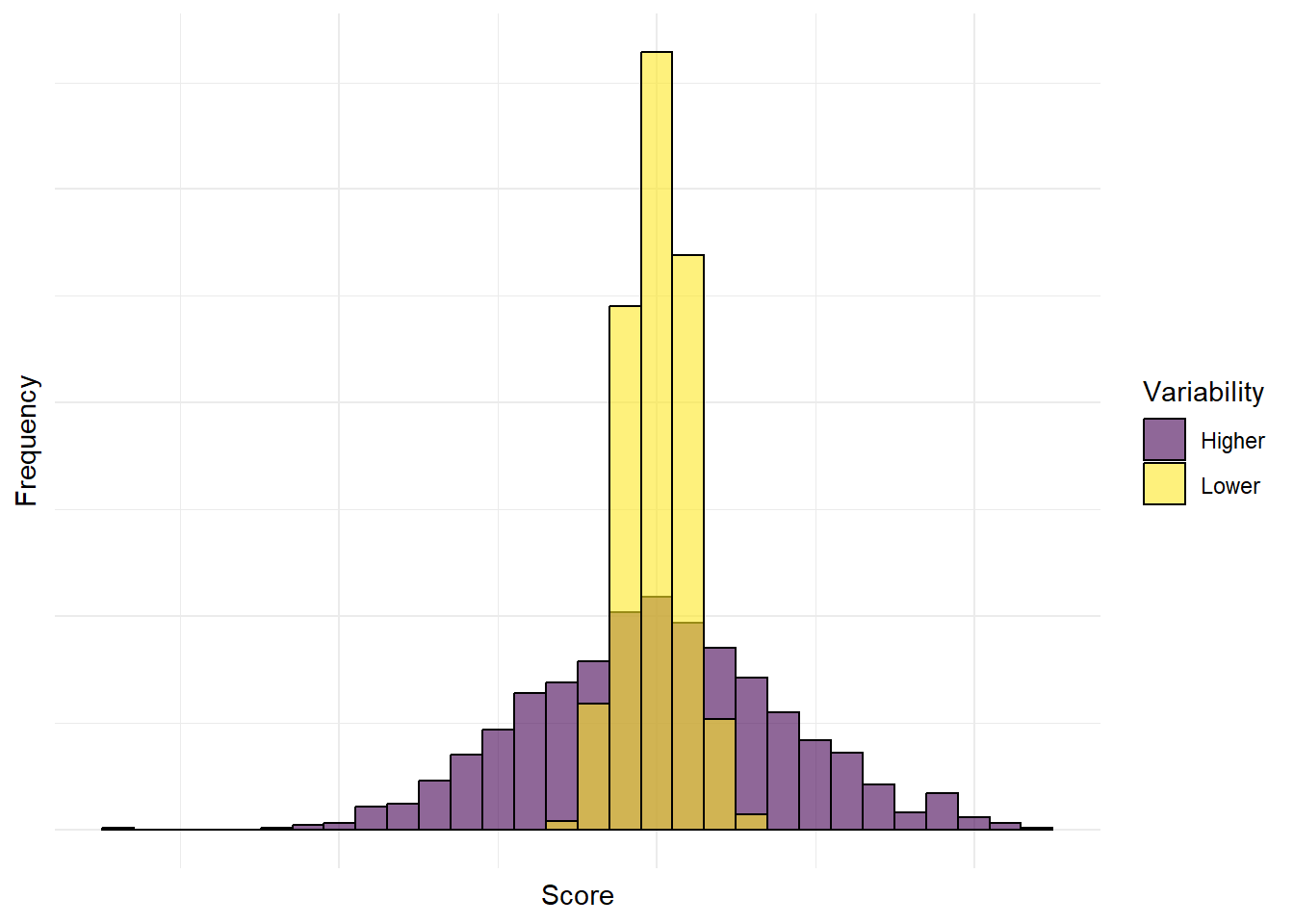

Variability refers to how spread out or dispersed the values in a dataset are. It gives an indication of how much the data points differ from each other and from the central tendency (e.g., the mean or median). High variability means the data points are spread widely apart, while low variability indicates they are clustered closely together.

Variability in data:

In the above, you can tell that the purple distribution is much more spread out than the yellow distribution. The yellow group is more closely grouped towards the center and mean of their group, whereas the purple group is more spread out. Said another way, the average distance between any score and their group’s mean is much smaller for the yelloe group compared to the purple group.

3.1.2.1 Range

The range represents the difference between the minimum and maximum values of a variable in a dataset. It is a quick way to determine the variability in scores and allows us to identify outliers or other extreme scores.

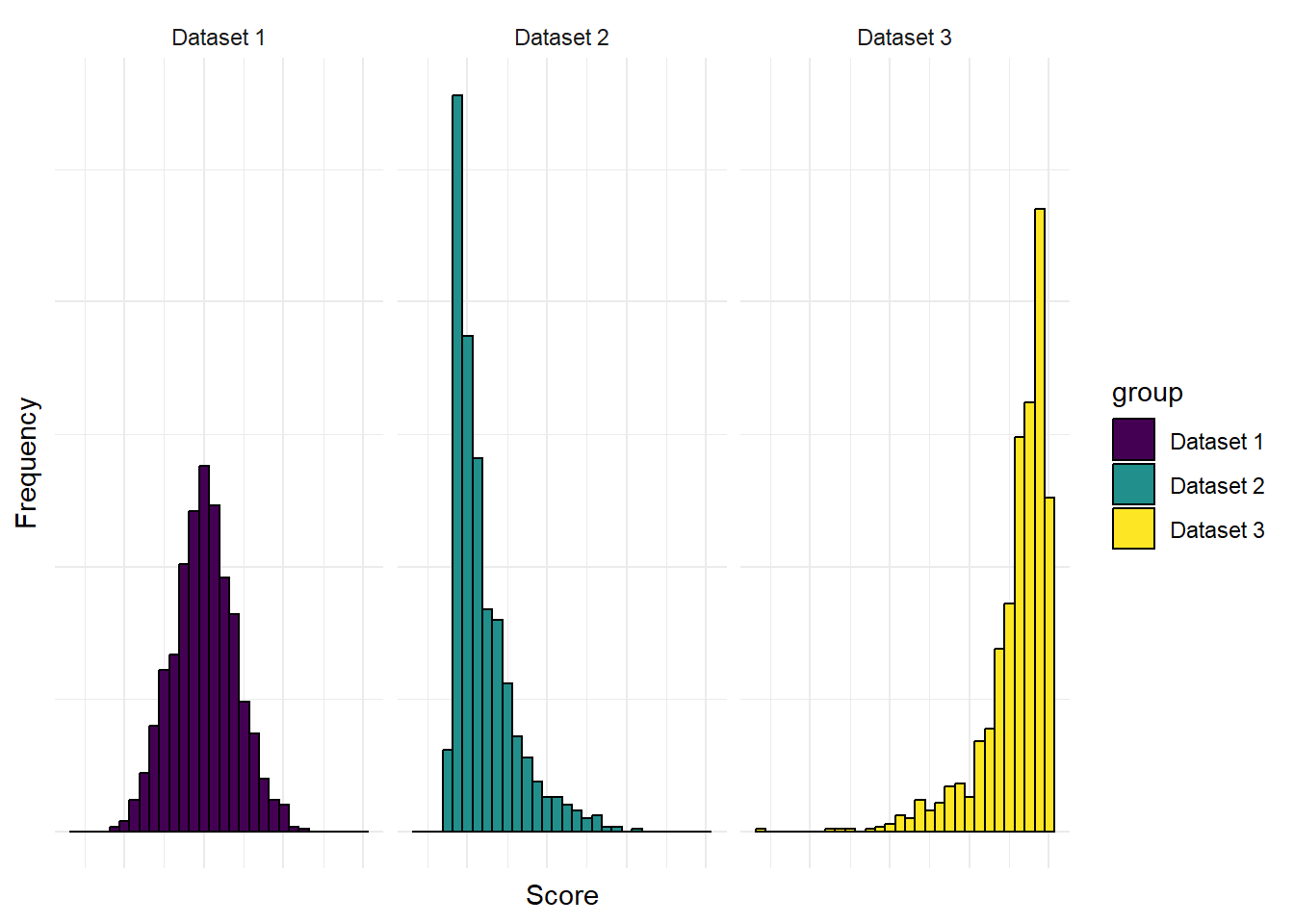

However, range tells nothing about the type of distribution of the data you are describing. For exame, all of the following have the same range:

For our hypothetical age data above, the min value is 20 and the max value is 45. Thus, the range is:

3.1.2.2 Variance

Variance quantifies the spread of data points in a dataset. It is the average squared differences from the mean. It indicates how much individual data points deviate from the mean, with a higher variance reflecting greater variability.

Variance is widely used assess variability, identify trends, and predict outcomes. For instance, in psychological research, variance helps measure individual differences or treatment effects across groups.

One of its strengths is that it considers all data points in the dataset, offering a great measure of variability. However, variance is sensitive to outliers, which can distort the results. This is particularly problematic for small data sets. Additionally, since variance is expressed in squared units, it can be difficult to interpret in practical terms. To address this, the square root of variance, known as the standard deviation, is often used for better interpretability.

Variance is calculated as:

Where:

The above is the population variance. The sample variance, which you will likely use in your psychological research is:

See another chapter (CHAPTER HERE) for why we subtract from

For our data:

3.1.2.3 Standard Deviation

Standard deviation is used to quantifies the amount of variation or dispersion in a dataset. It is the square root of variance, making it a more interpretable measure as it is expressed in the same units as the data. Standard deviation is useful to help researchers understand how spread out scores are around the mean, such as in assessing the variability of test scores or behavioral responses. A low standard deviation indicates that data points are close to the mean, while a high standard deviation shows that scores have more variability.

One key advantage of standard deviation is its intuitive interpretability, especially compared to variance. It gives a clear sense of how much data points deviate from the average. However, like variance, standard deviation is sensitive to outliers, which can inflate its value (i.e., make it higher). Additionally, it assumes a normal distribution of data, so it may not fully capture variability in skewed datasets or those with non-normal distributions.

The formula for population and sample standard deviation can easily be calculated as the square root of population and sample variance, respectively. Or:

Population:

Sample:

For our data:

3.1.3 Graphical Representations

3.1.3.1 Histograms

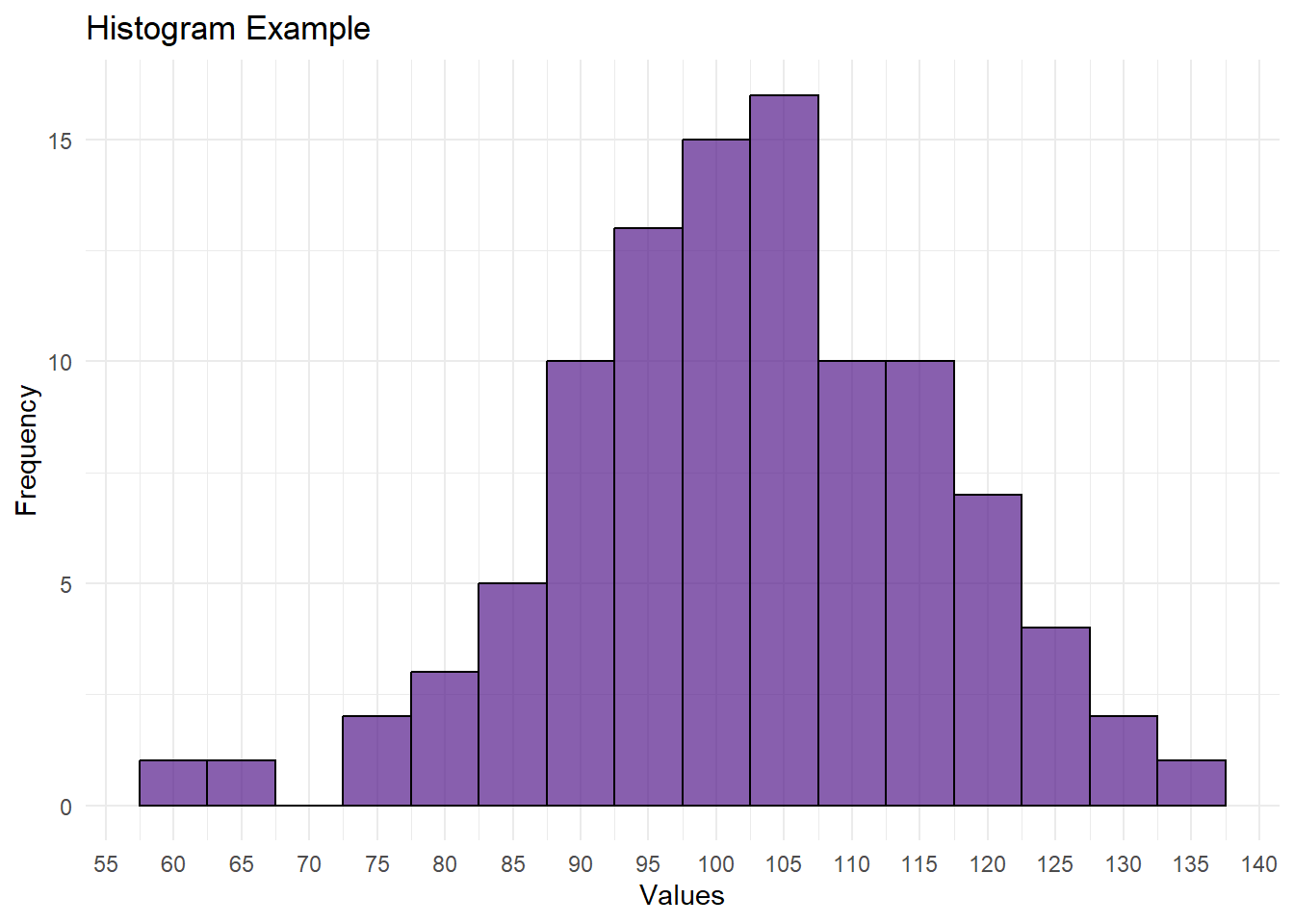

A visual representation of the distribution of a dataset, showing the frequency of different ranges of values.

To read a histogram, start by examining the x-axis, which represents the range of values in the dataset. The data is divided into intervals or bins along the x-axis, and the y-axis displays the frequency or count of observations within each bin.

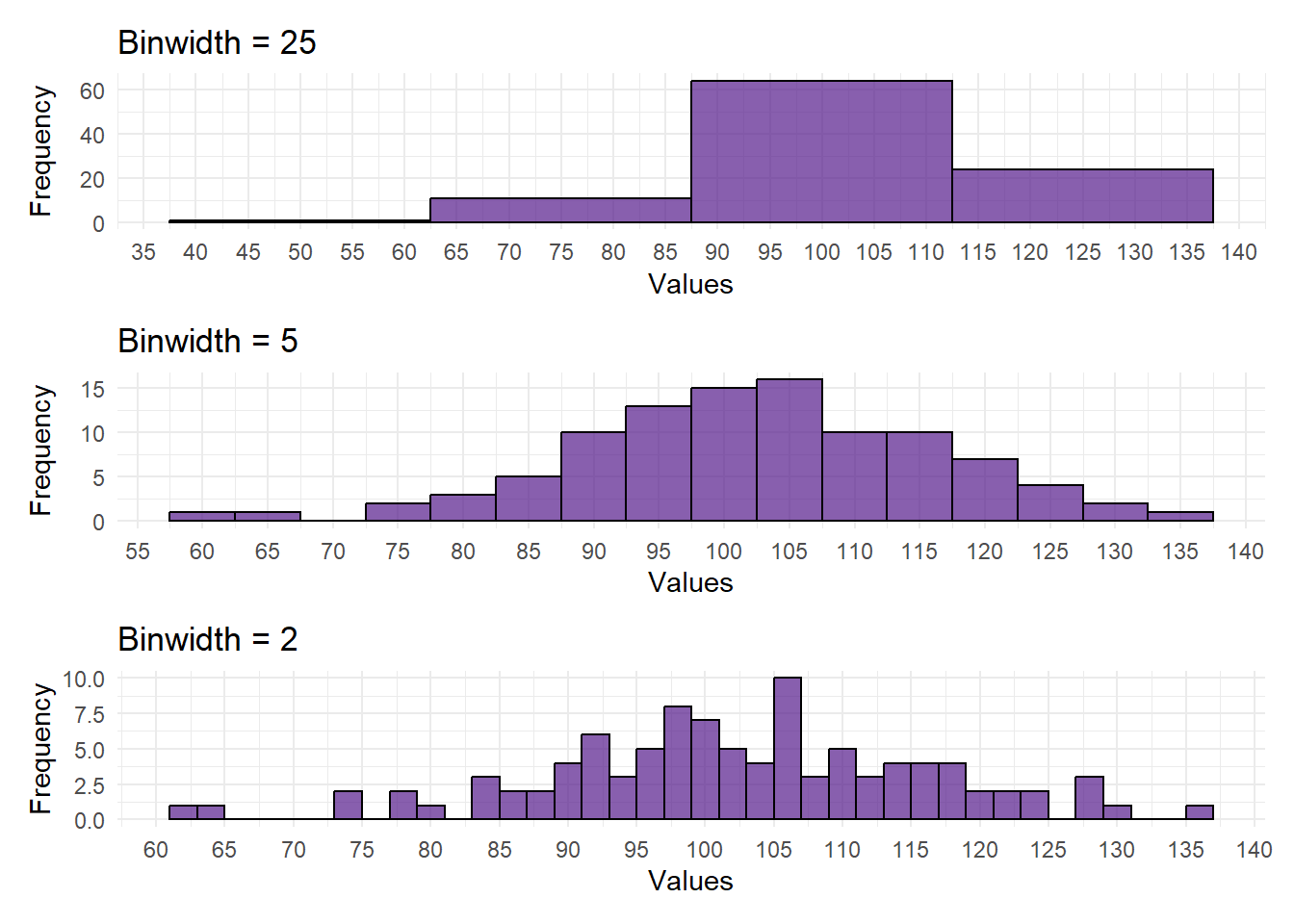

The height of each bar corresponds to the number of data points falling within that bin. A key aspect is the width of the bins, as it influences the visual interpretation. A narrower bin width can reveal finer details in the distribution, while a broader bin width may smooth out fluctuations. As an example, consider the following three histograms, all with the same data, but differing bin widths:

Additionally, the overall shape of the histogram indicates the data’s central tendency, spread, and symmetry. Peaks and valleys highlight regions of higher or lower frequency, and the tails provide information about outliers or other extreme values.

3.1.3.2 Box plots

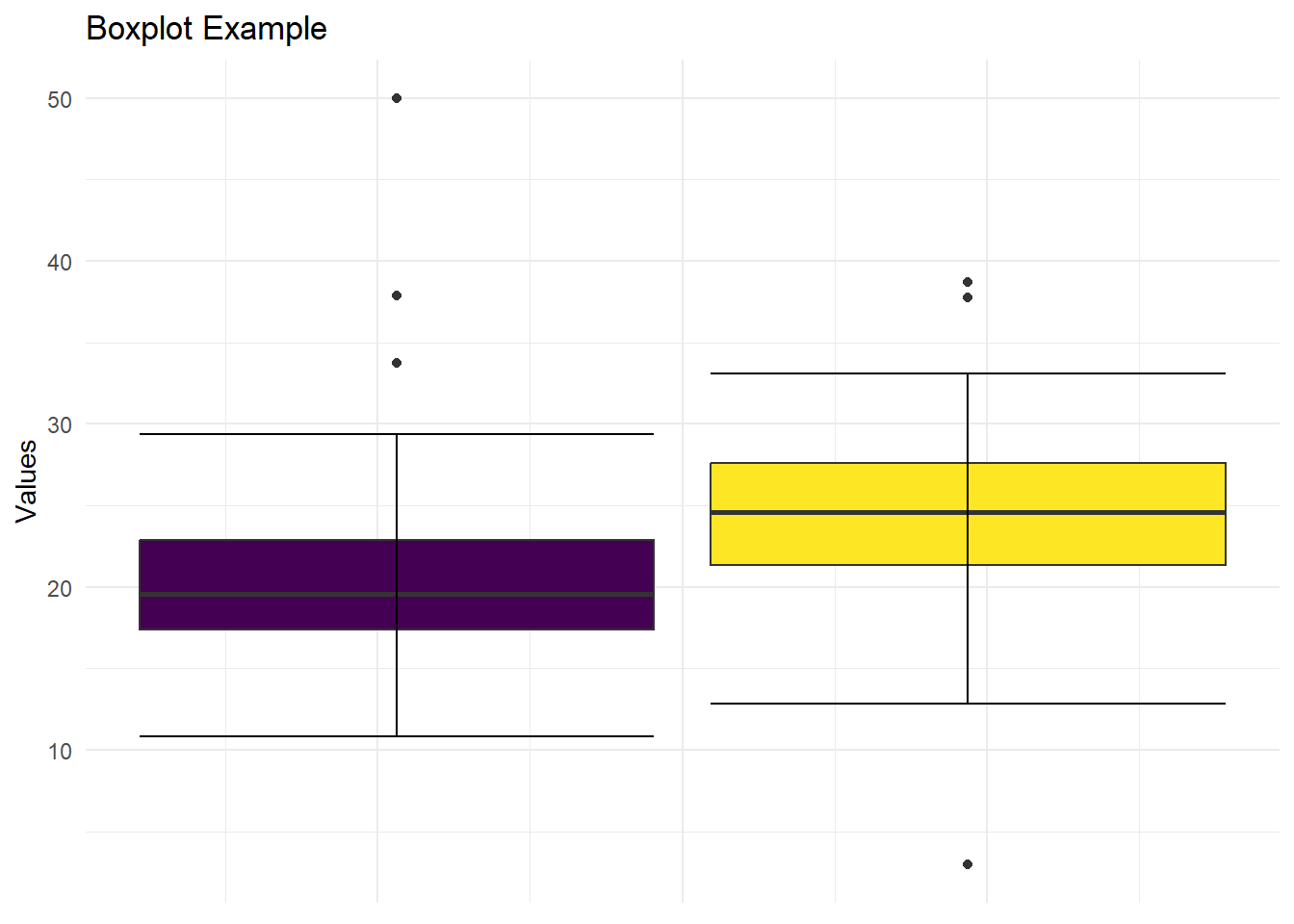

Box plots are graphical summaries of the distribution of a variable in a dataset, including the median, quartiles, and potential outliers. The following box plot represents data from two groups. The left box represents one group, the right box the the other.

Interpreting a Boxplot

First, the box–in our figure there are two coloured boxes–represents the middle 50% of the data, known as the interquartile range (IQR). The lower (Q1) and upper (Q3) edges of the box correspond to the 25th and 75th percentiles, respectively.

Second, the line inside the box represents the median. You have already read about what the median is, its benefits and downsides.

Third, the whiskers extend from the box to the minimum and maximum values within a certain range. The length of the whiskers can vary. There are several equations that may be used. It is common for any data outside of the whiskers to be considered extreme or as outliers.

Fourth, outliers are individual data points that fall significantly outside the typical range of the data. They are often plotted as individual points or dots.

Boxplots are a great way to visualize a distibution and can inform viewers on the nuances of the data not undestood through a histogram.

3.1.3.3 Scatter plots

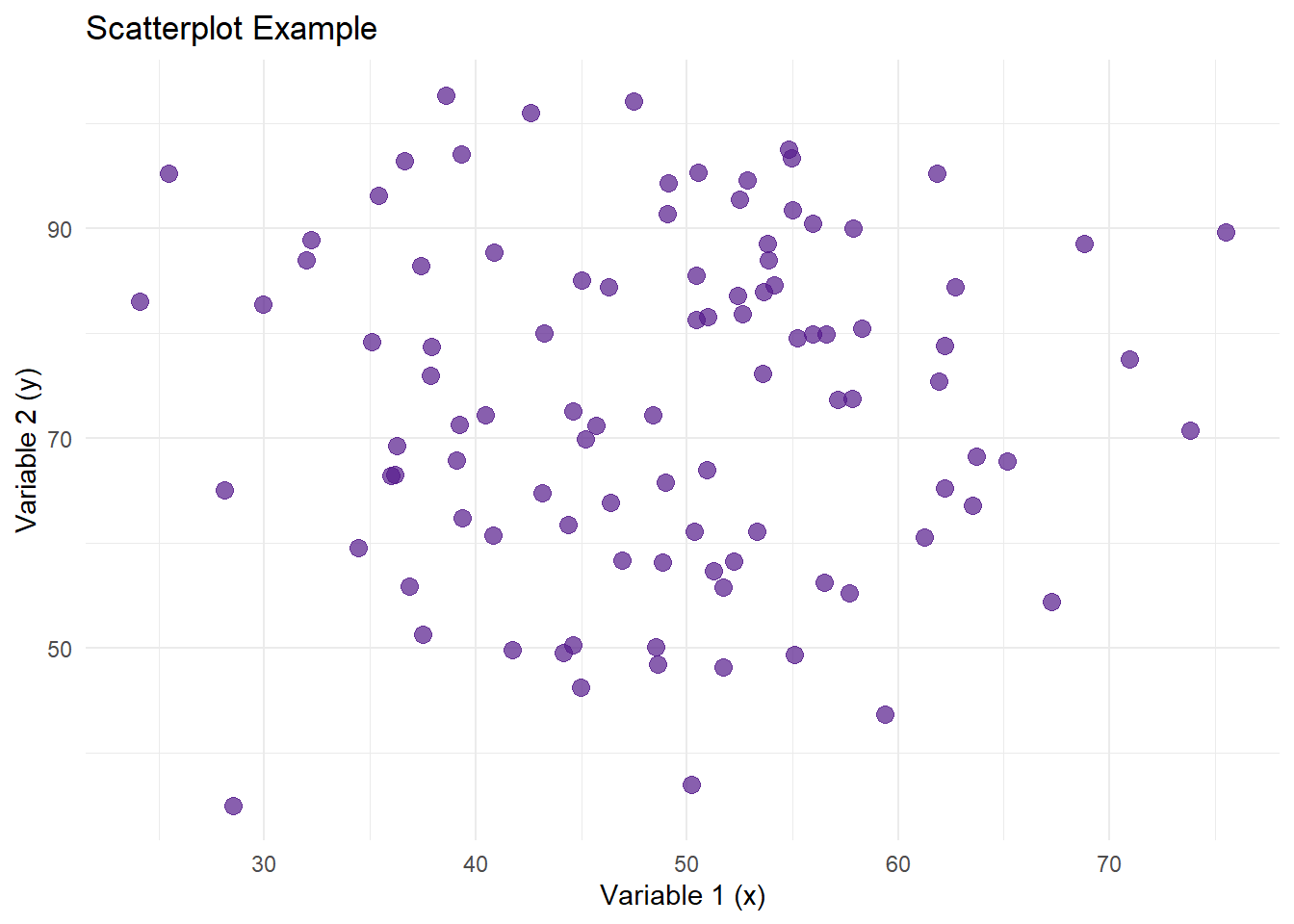

Scatterplot display the relationship between two variables in a two-dimensional space.

Scatterplots serve as powerful tools for exploring the relationship between two variables in a dataset. The direction in which points trend across the two-dimensional plane, whether upward (i.e., dots get higher as you look from lef tot right) or downward (i.e., dots ger lower as you look from left to right), offers immediate insights into the association between the variables.

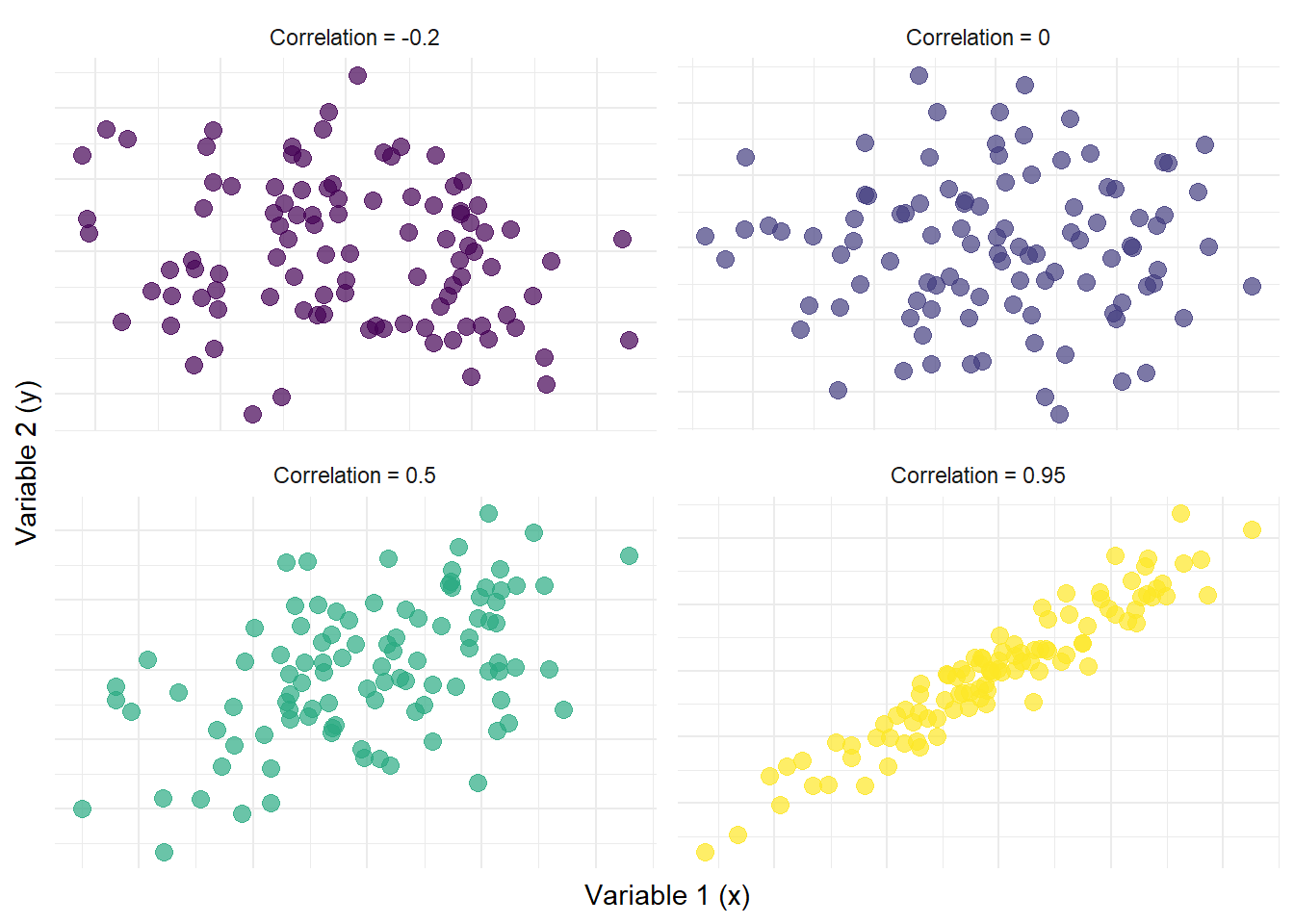

As the relationship between two variables increases, the points will converge to a line. If the correlation between two variables is exactly -1.0 or 1.0, all dots will fall on a straight line.

Descriptive statistics provide a concise summary of the main features of a dataset, aiding in the interpretation and communication of data patterns. These statistics are fundamental for understanding the characteristics of a dataset before applying more advanced statistical analyses or drawing conclusions based on the data.

3.2 Inferential Statistics

Our theories and hypotheses are typically assumed to apply to a specific population (e.g., EVERYONE, men, university students). Sampling from a population is essential in research because studying an entire population is often impractical due to limitations in time, cost, and accessibility. For example, in large populations, such as all residents of a country or all patients with a particular medical condition, it is usually impossible to gather data from every individual. A sample, or a subset of the population, allows researchers to draw meaningful conclusions about the whole population while conserving resources. However, the challenge lies in selecting a sample that accurately represents the population to ensure the findings can be generalized.

A sample is a subset of the population that will be studied, which allows researchers to draw meaningful conclusions about the whole population.

To ensure that a sample is unbiased, researchers use several strategies. Random sampling is one of the most reliable methods, where each individual in the population has an equal chance of being chosen. This reduces the likelihood of selection bias and ensures that the sample mirrors the diversity of the population. For instance, if studying a town’s attitudes towards healthcare, random sampling would give each resident an equal chance of being selected, regardless of age, gender, or socioeconomic status. Here, the sample should not be systematically different from the population of interest.

Another approach is stratified sampling, where the population is divided into subgroups or strata based on specific characteristics (such as age, gender, or income). From each subgroup, random samples are then drawn. This method is particularly useful when researchers want to ensure that all key groups within the population are represented in the sample. For example, in a study of high school students’ academic performance, stratified sampling might involve dividing students by grade level and then randomly selecting a proportional number from each grade.

Systematic sampling offers a structured yet efficient way of selecting a sample. In this method, every

Last, cluster sampling involves dividing the population into groups or clusters, such as geographic regions or schools, and then randomly selecting entire clusters for study. This method is particularly helpful when the population is large or spread out over a wide area, as it simplifies the data collection process. However, it may introduce bias if the selected clusters systematically differ from the population. When this is the case, the sample will not be representative of the entire population.

Sampling from a population is vital for making research manageable and cost-effective. Using methods like random sampling, stratified sampling, systematic sampling, and cluster sampling helps ensure that the sample is unbiased and representative, allowing researchers to confidently generalize their findings to the broader population. Deciding which method is best for you will depend on your time, money, population of interest, and research question.

After we identify a suitable sample, we collect data from that sample. As discussed, a major requirement is that the sample is representative of the population of interest. It is imperative to inferential statistics that this is the case. Specifically, the sample should share the same characteristics as the population of interest.

After data collection, we can analyse it according to our pre-specified plan. The reaults can be written up. Importantly, once we analyze our data using the sample, we assume the results generalize to the entire population. We infer about the population based on research using samples. This is inferential statistics.

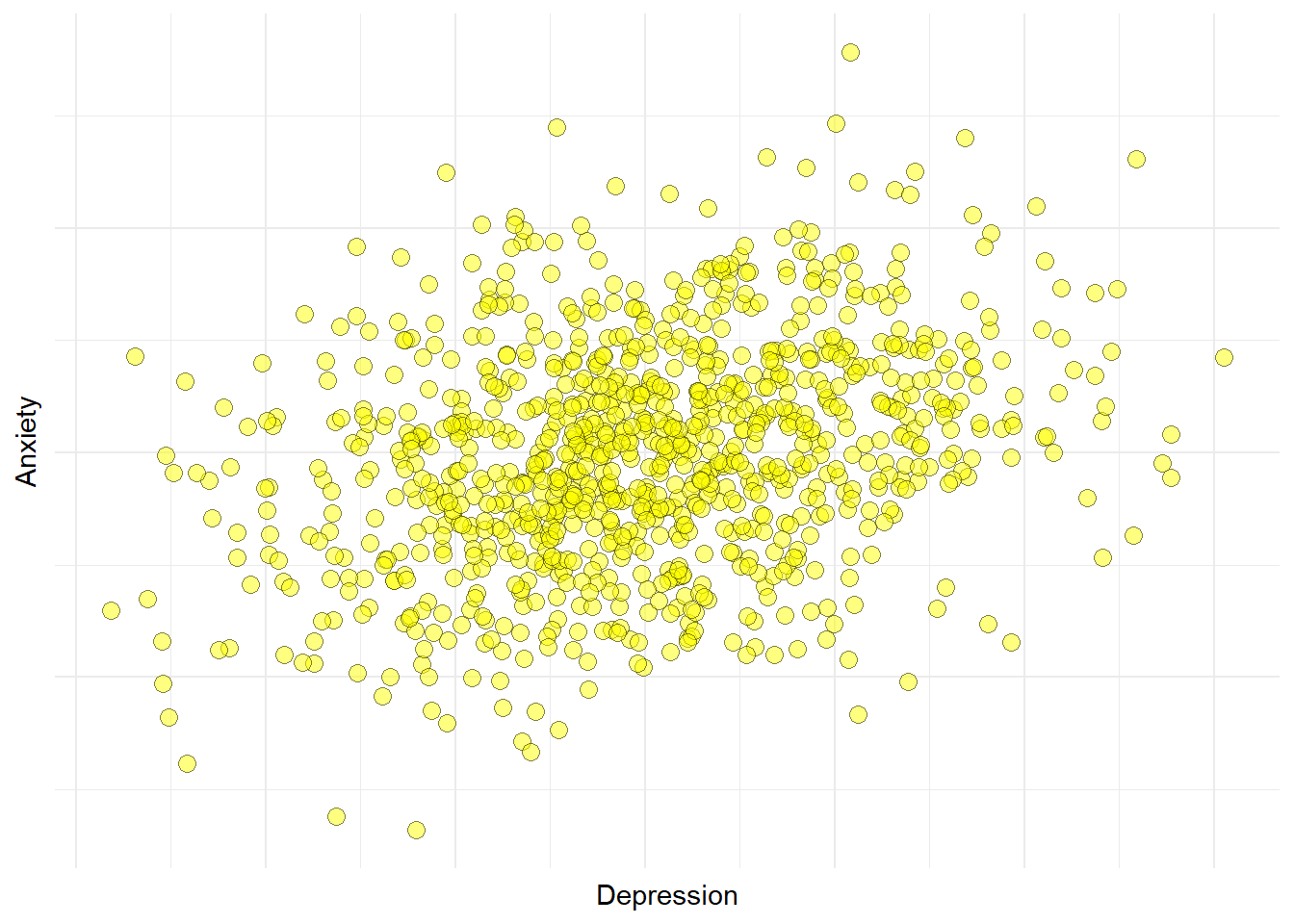

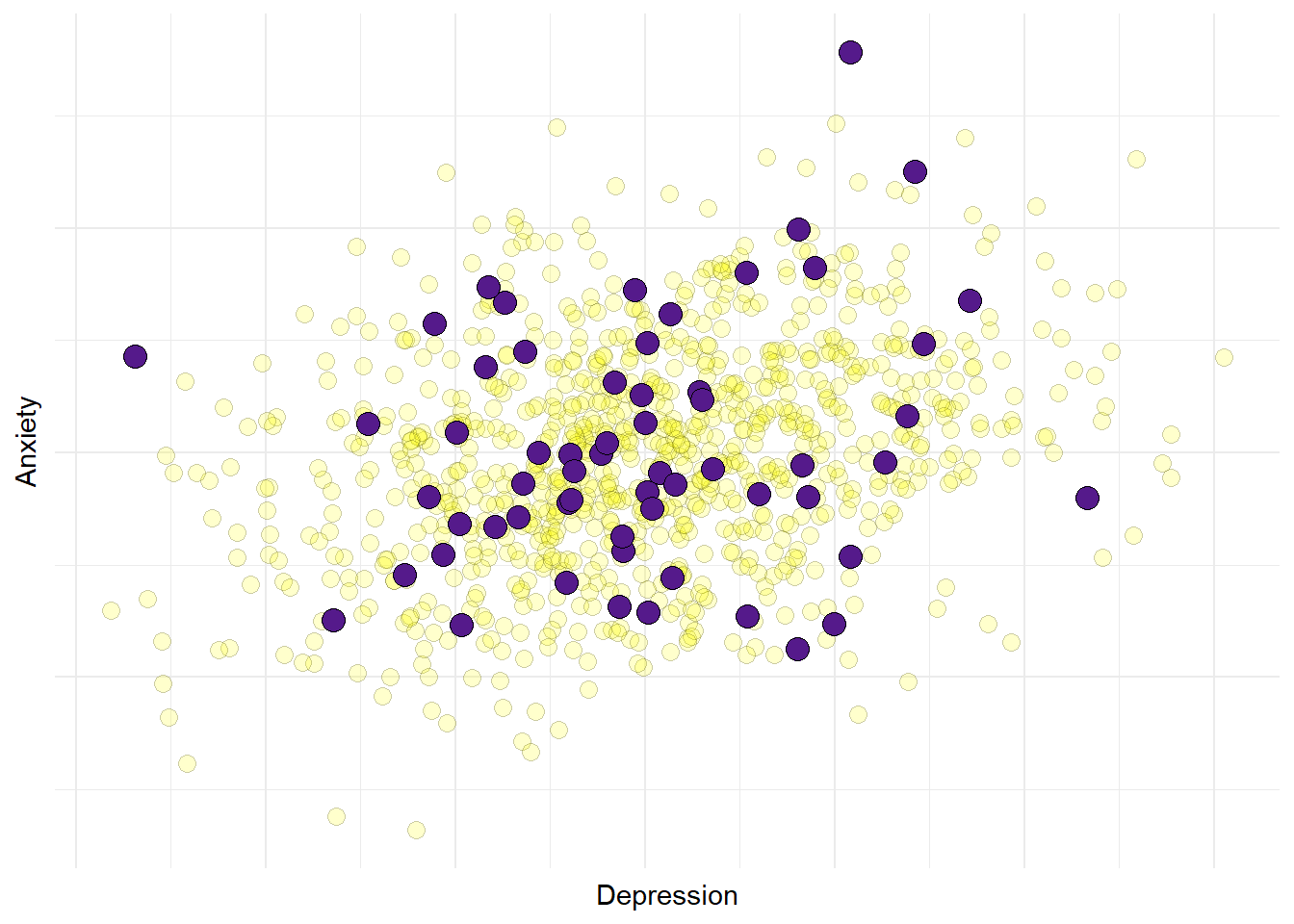

For example, imagine we are interested in understanding the link between depression and anxiety in Grenfell Students (our population). Hypothetically, the following figure represents all Grenfell Students.

We don’t have the time or money to sample all students. Perhaps we sample for two full months and end up collecting data from 100 of these individuals. These 100 individuals are our sample. Their specific data can be viewed below (indicated by dark purple).

We would conduct analysis with the sample and infer that they generalize to the population! The following chapters outline the various analyses we can use to make population-based inferences fro samples of data. Each analysis has its time and place, which will largely depend on the research question and hypotheses of interest. Remember, the research question determines the method, not the other way around.

How can inferential statistics help psychologists draw meaningful conclusions from data, beyond just describing the sample at hand?

Can inferential statistics be misinterpreted or misused, and what steps can psychologists take to ensure the accuracy and validity of their statistical inferences?

What challenges and opportunities arise when applying inferential statistics to complex psychological phenomena, such as emotions, cognition, or interpersonal relationships?

How do cultural and contextual factors impact the appropriateness and interpretation of inferential statistics in cross-cultural psychological research?