| track_artist | track_name | Popularity | Valence | Danceability |
|---|---|---|---|---|
| Camila Cabello | My Oh My (feat. DaBaby) | 208 | 13 | 2 |
| Tyga | Ayy Macarena | 95 | 17 | 7 |
| Maroon 5 | Memories | 200 | 23 | 4 |
| Harry Styles | Adore You | 62 | 9 | 9 |
| Sam Smith | How Do You Sleep? | 189 | 22 | 4 |
| Tones and I | Dance Monkey | 87 | 19 | 7 |
| Lil Uzi Vert | Futsal Shuffle 2020 | 140 | 1 | 5 |
| J Balvin | LA CANCIÓN | 144 | 14 | 5 |
| Billie Eilish | bad guy | 129 | 6 | 7 |
| Dan + Shay | 10,000 Hours (with Justin Bieber) | 141 | 8 | 5 |
| Regard | Ride It | 191 | 13 | 3 |
| Billie Eilish | bad guy | 175 | 19 | 5 |
| The Weeknd | Heartless | 126 | 13 | 4 |
| Y2K | Lalala | 112 | 18 | 5 |
| Future | Life Is Good (feat. Drake) | 36 | 16 | 8 |
| Lewis Capaldi | Someone You Loved | 76 | 17 | 6 |
| Anuel AA | China | 185 | 22 | 4 |
| Regard | Ride It | 162 | 17 | 5 |
| Dua Lipa | Don't Start Now | 144 | 7 | 4 |
| Anuel AA | China | 144 | 12 | 5 |
| Regard | Ride It | 133 | 13 | 5 |
| Bad Bunny | Vete | 107 | 13 | 6 |
| Roddy Ricch | The Box | 142 | 7 | 2 |
| Juice WRLD | Bandit (with YoungBoy Never Broke Again) | 109 | 12 | 7 |
| Roddy Ricch | The Box | 247 | 25 | 4 |
| Regard | Ride It | 133 | 14 | 5 |
| Trevor Daniel | Falling | 149 | 11 | 4 |
| Anuel AA | China | 190 | 15 | 3 |
| Shawn Mendes | Señorita | 140 | 8 | 4 |
| Travis Scott | HIGHEST IN THE ROOM | 186 | 10 | 3 |
| Juice WRLD | Bandit (with YoungBoy Never Broke Again) | 164 | 21 | 4 |
| Camila Cabello | My Oh My (feat. DaBaby) | 135 | 22 | 6 |
| Sam Smith | How Do You Sleep? | 113 | 12 | 5 |
| Harry Styles | Adore You | 129 | 10 | 4 |
| Don Toliver | No Idea | 53 | 20 | 7 |
| Billie Eilish | everything i wanted | 133 | 20 | 5 |
| Lil Uzi Vert | Futsal Shuffle 2020 | 65 | 21 | 7 |
| DaBaby | BOP | 111 | 16 | 5 |
| Lil Uzi Vert | Futsal Shuffle 2020 | 18 | 23 | 8 |
| blackbear | hot girl bummer | 166 | 17 | 4 |
| Tones and I | Dance Monkey | 198 | 13 | 2 |
| Tyga | Ayy Macarena | 87 | 13 | 6 |
| Selena Gomez | Lose You To Love Me | 113 | 11 | 5 |
| Dalex | Hola - Remix | 106 | 15 | 5 |
| The Black Eyed Peas | RITMO (Bad Boys For Life) | 100 | 11 | 8 |
| Arizona Zervas | ROXANNE | 116 | 11 | 6 |
| The Black Eyed Peas | RITMO (Bad Boys For Life) | 111 | 4 | 6 |
| Arizona Zervas | ROXANNE | 101 | 14 | 6 |
| Roddy Ricch | The Box | 57 | 13 | 8 |
| MEDUZA | Lose Control | 119 | 23 | 6 |

18 Multiple Regression

This chapter will cover multiple regression, a statistical method used to examine the relationship between a dependent (or outcome/criterion) variable and multiple independent (predictor) variables. Unlike simple regression, which involves only one predictor, multiple regression allows researchers to assess the combined influence of several predictors on an outcome. But why do researchers need multiple predictors?

Using multiple predictors in regression allows researchers to better understand complex relationships between variables. Real-world outcomes are rarely influenced by a single factor; instead, they result from multiple interacting influences. By including multiple predictors, we can control for confounding variables, improve the accuracy of our predictions, and gain a more comprehensive understanding of how different factors contribute to an outcome.

18.1 Some Additional Details

Multiple regression is useful in situations where we expect multiple factors to influence an outcome. For example, a researcher might want to predict job performance based on cognitive ability, motivation, and job experience.

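The general form of the multiple regression equation is:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_k X_k + \varepsilon$$

where:

- $Y$ is the dependent (outcome/criterion) variable,
- $\beta_0$ is the intercept (the predicted value of $Y$ when all predictors equal 0),
- $\beta_1, \dots, \beta_k$ are the regression coefficients for the $k$ predictors $X_1, \dots, X_k$, and
- $\varepsilon$ is the error term.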

18.1.1 Key Assumptions

Like all of our analyses thus far, a multiple regression model is valid only under the following assumptions (many of which we have already explored):

1. Linearity

The relationship between each predictor and the dependent variable should be linear.

2. Independence of Errors

Observations should be independent, and errors should not be correlated.

3. Homoscedasticity

The variance of errors should be constant across all levels of the independent variables.

4. Normality of Residuals

The residuals (errors) should be normally distributed.

5. No Multicollinearity

Predictor variables should not be highly correlated with one another. More to come.

Prior to further exploring our hypotheses and conducting a formal analysis, an explanation of various types of correlations is needed. Correlations help us understand the relationships between variables and are particularly important in multiple regression, where we assess the contribution of multiple predictors to an outcome variable.

18.2 Types of Correlations

18.2.1 Pearson Correlation Coefficient

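We have already explored correlation, which is calculated as:

$$r_{XY} = \frac{\operatorname{cov}(X, Y)}{s_X s_Y}$$

where:

- The numerator represents the covariance between $X$ and $Y$.

- The denominator standardizes the covariance by dividing by the product of the standard deviations ($s_X$ and $s_Y$).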
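Pearson's $r$ is the covariance of two variables divided by the product of their standard deviations, and that definition is easy to check numerically. Below is a minimal base-R sketch, assuming the 50 songs from the table at the start of this chapter are stored in a data frame called `taylor` (the name used in this chapter's `lm()` call). It computes $r$ for Danceability and Popularity by hand and compares it with the built-in `cor()`.

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)

x <- taylor$Danceability
y <- taylor$Popularity

# Covariance standardized by the product of the two standard deviations
r_manual <- cov(x, y) / (sd(x) * sd(y))
r_builtin <- cor(x, y)

round(c(manual = r_manual, builtin = r_builtin), 2)  # both about -0.82
round(r_manual^2, 2)  # squared r (coefficient of determination), about 0.68
```

Both `cov()` and `sd()` divide by $n - 1$, so those terms cancel and the hand calculation matches `cor()`.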

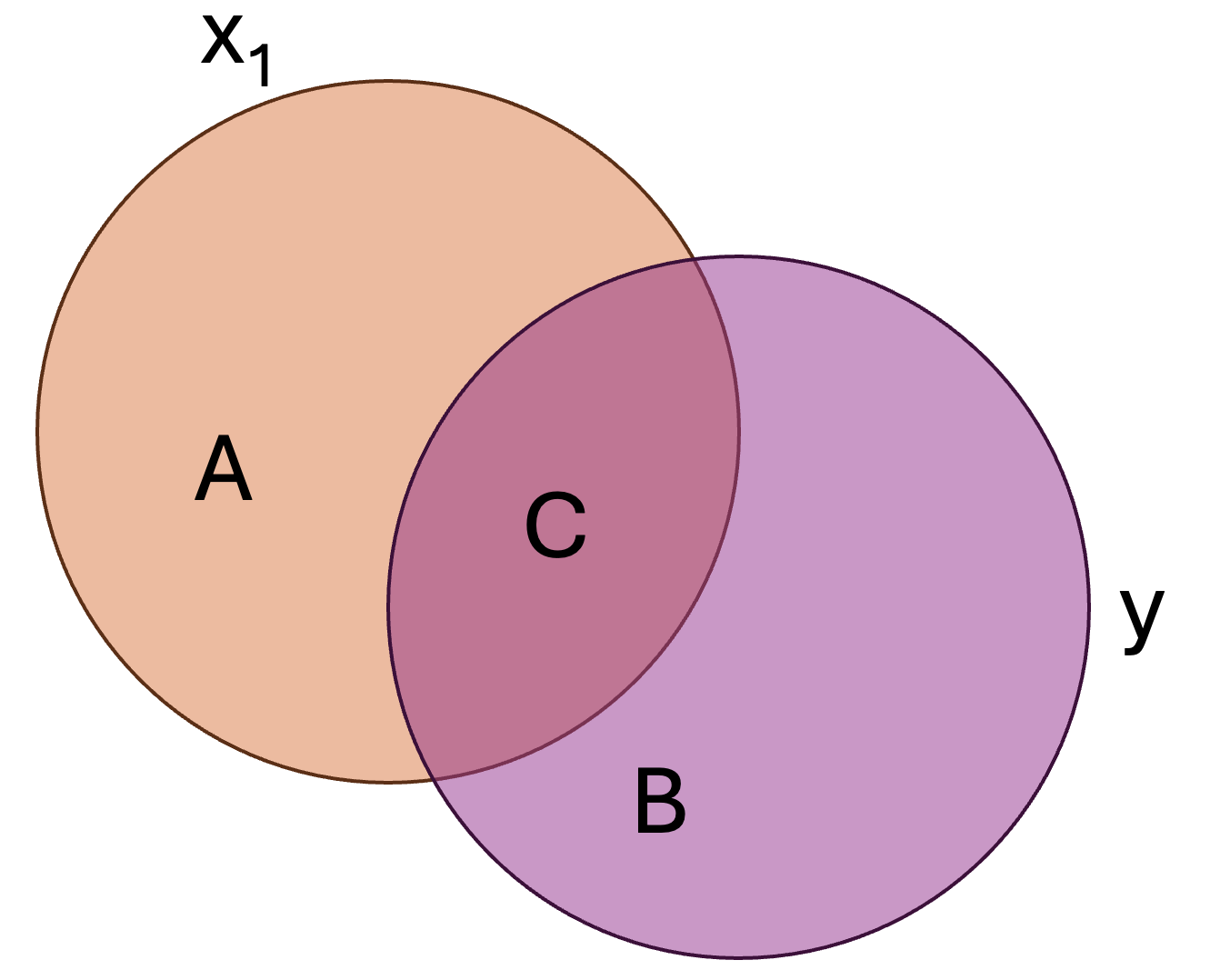

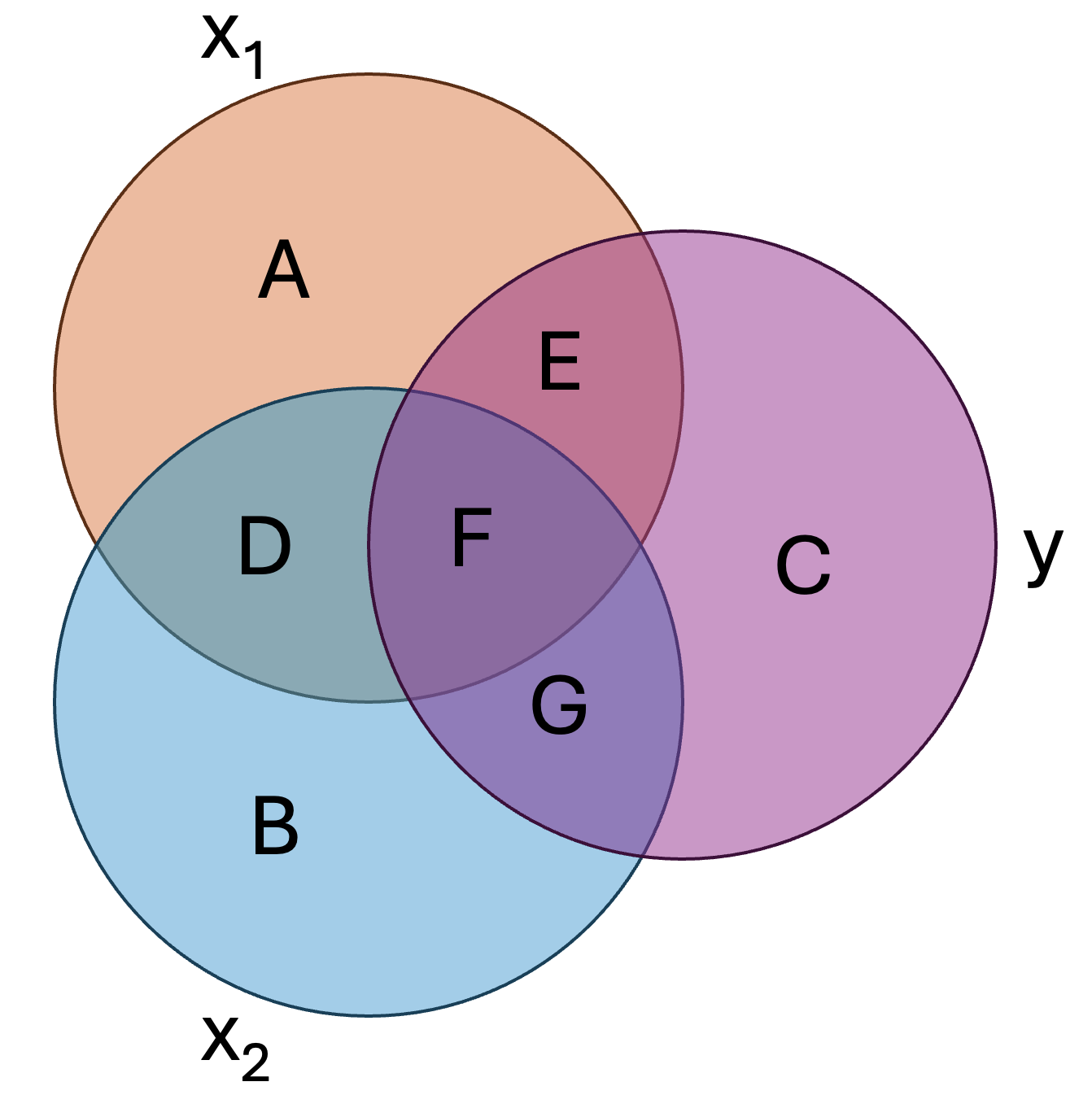

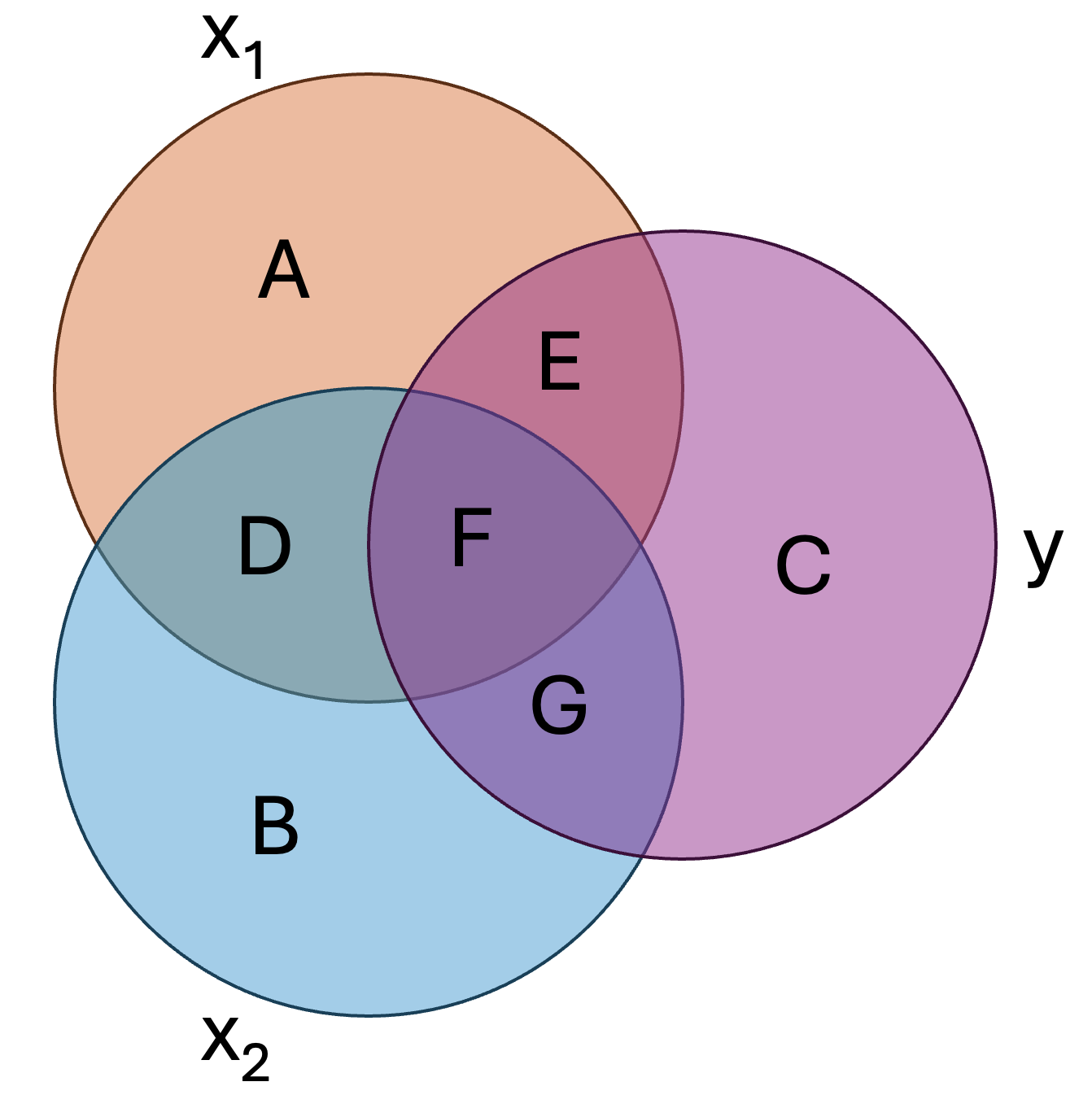

To visualize the coefficient of determination ($r^2$, the proportion of variance two variables share), consider the following two variables:

Here:

18.2.2 Semi-Partial Correlation (Part Correlation)

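The semi-partial correlation, denoted as $sr$, is the correlation between the outcome and a predictor after the influence of the other predictor(s) has been removed from that predictor only (not from the outcome).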

For example, if we are examining the relationship between study hours (

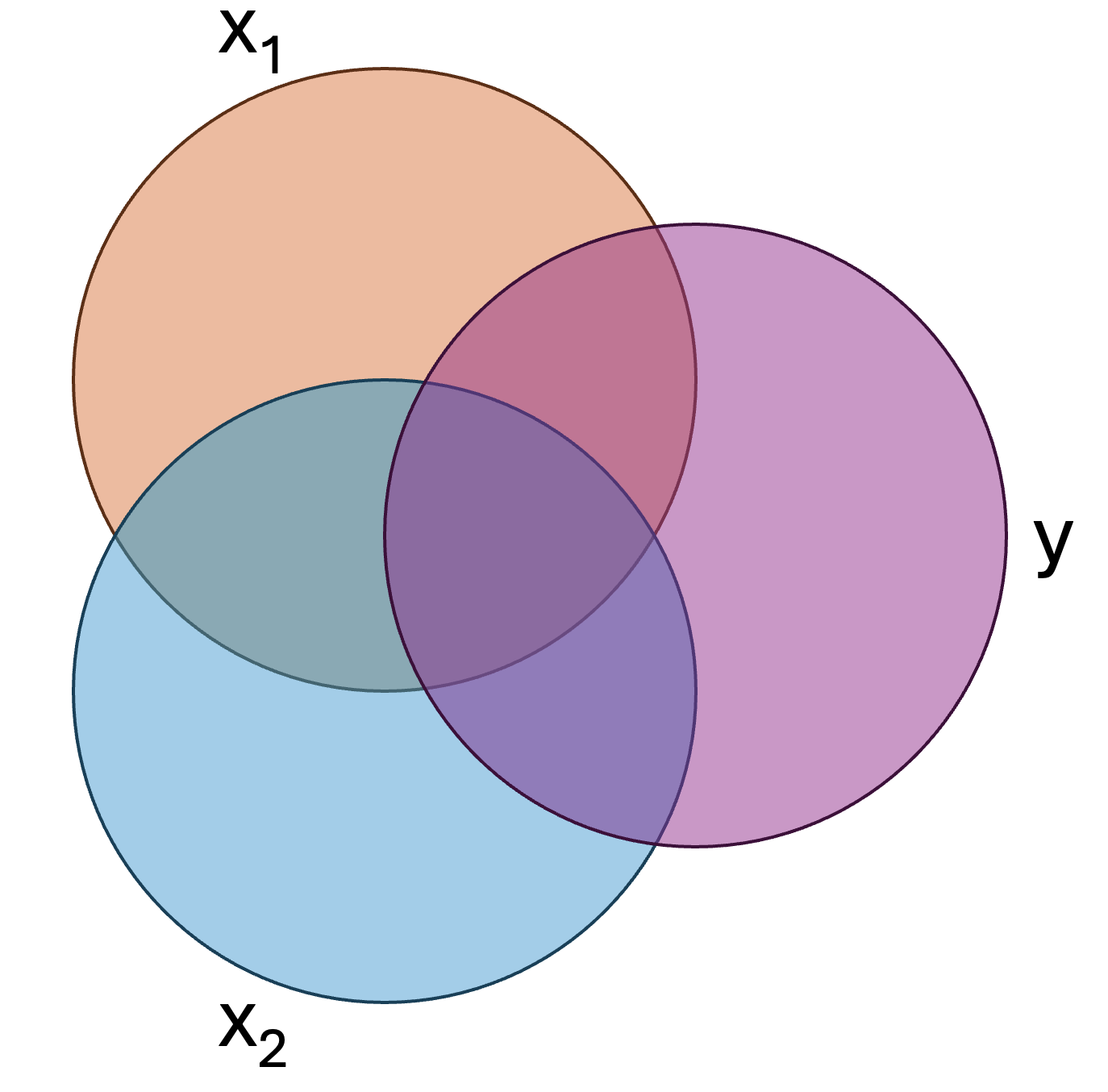

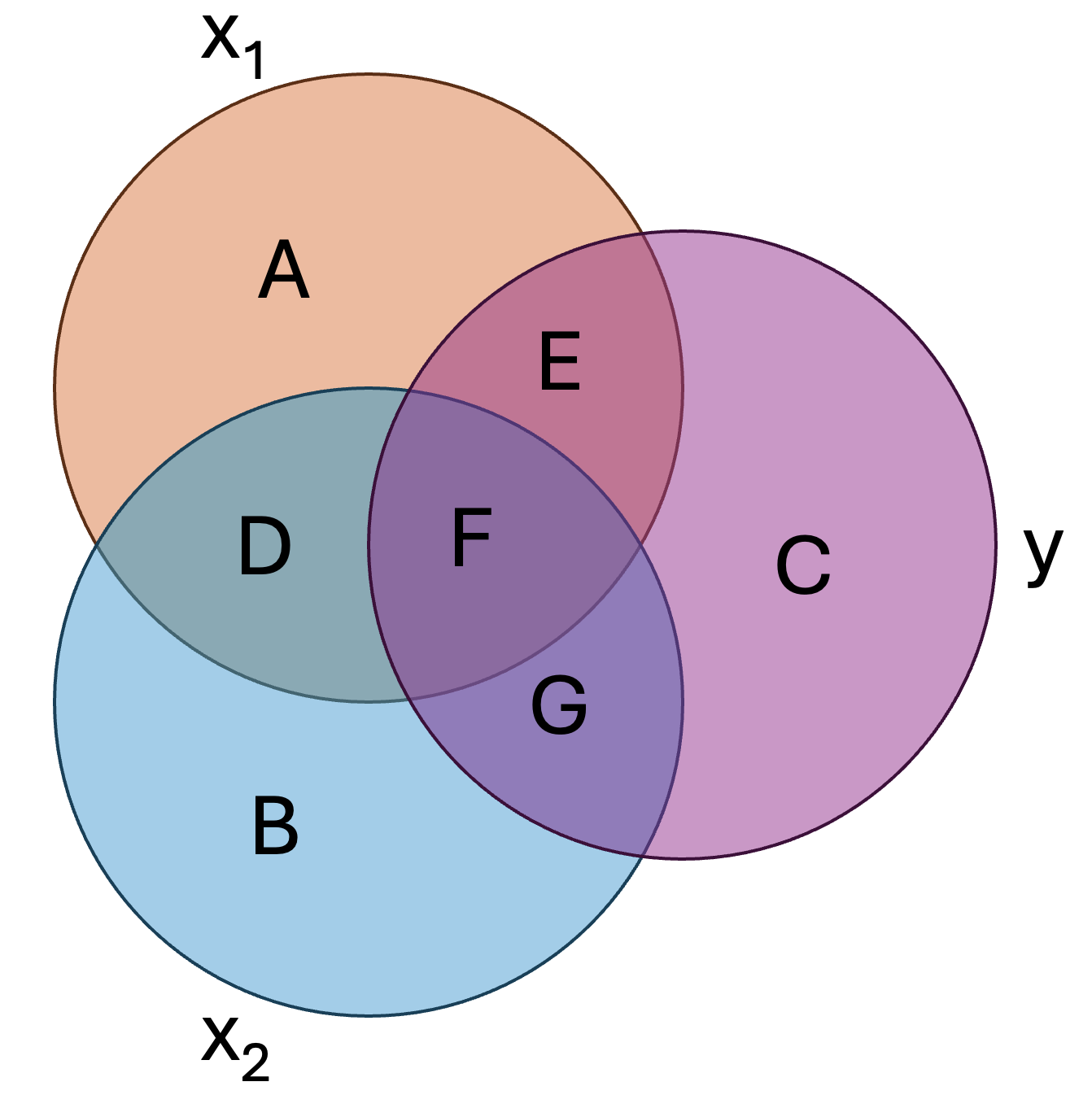

In short, the squared semi-partial correlation is the variance uniquely explained by a predictor, relative to all of the variance in the criterion. Let’s now visualize a regression model wherein we have two predictors.

We can assign each region of the above figure a letter:

In this figure:

In the regular Pearson correlation,

Or, simply:

In the above formula we are, essentially, saying that

- What regions would represent the squared semi-partial/part correlation of

- What would be the mathematical formula?

18.2.3 Partial Correlation

The partial correlation, denoted as or

Mathematically, the squared partial correlation,

The squared partial correlation of

Or, mathematically:

Both partial and semi-partial correlations help us understand how an independent variable (IV) relates to the dependent variable (DV) while accounting for other variables in a regression model. However, they answer slightly different questions. Here is a quick reference to help you.

1. Partial Correlation → “What is the pure relationship between this predictor and the outcome?”

- It tells you how much an IV is related to the DV after removing the influence of other IVs from both the predictor and the outcome.

- Example: If you’re studying how stress affects exam scores while controlling for sleep, the partial correlation tells you the direct relationship between stress and scores as if sleep were completely removed from both stress and scores.

2. Semi-Partial (Part) Correlation → “How much does this predictor add to the model’s ability to predict the outcome?”

- It tells you how much an IV uniquely contributes to explaining the DV without adjusting the DV itself.

- Example: If you add stress as a predictor to your exam scores model (which already includes sleep), the semi-partial correlation tells you how much extra variance in exam scores is explained just by stress (after removing overlap with sleep in stress but not in the scores).
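Both quantities can be computed by regressing out the control variable and correlating what is left over (the residuals). Below is a minimal base-R sketch for Danceability, controlling for Valence, assuming the same 50-song `taylor` data frame from the start of the chapter. For the semi-partial correlation, Valence is removed from Danceability only; for the partial correlation, it is removed from both Danceability and Popularity. The last line also checks that the squared semi-partial correlation equals the increase in $R^2$ when Danceability is added to a model that already contains Valence.

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)

# Part of Danceability that is NOT shared with Valence
dance_resid <- resid(lm(Danceability ~ Valence, data = taylor))
# Part of Popularity that is NOT shared with Valence
pop_resid <- resid(lm(Popularity ~ Valence, data = taylor))

# Semi-partial (part): Valence removed from the predictor only
sr_dance <- cor(taylor$Popularity, dance_resid)
# Partial: Valence removed from both the predictor and the outcome
pr_dance <- cor(pop_resid, dance_resid)

# Gain in R^2 from adding Danceability to a Valence-only model
r2_full <- summary(lm(Popularity ~ Valence + Danceability, data = taylor))$r.squared
r2_valence_only <- summary(lm(Popularity ~ Valence, data = taylor))$r.squared

round(c(sr2 = sr_dance^2, r2_gain = r2_full - r2_valence_only, pr2 = pr_dance^2), 3)
```

For these data the two values are almost identical, because Valence on its own explains very little of Popularity (its zero-order correlation is about .06), so removing it from the outcome changes little.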

In this class we will primarily use the semi-partial (part) correlation, mostly the squared part correlation, in our regression analyses. With this in mind, let’s continue with a practical example involving our favorite musician, Taylor Swift.

18.3 Regression…you can do it with a broken heart.

Taylor Swift and her team are consulting you, a research expert, to help determine which features of a song predict how popular it becomes. She hopes to use your findings to write new music. Specifically, she wants to know whether songs with certain characteristics are more likely to be played on Spotify. Taylor and her team have a theory that they call the “Rhythmic Positivity Theory”, which proposes that songs with higher danceability and happier tones are more popular because they elicit positive emotions and encourage social engagement. Taylor also has specific hypotheses: that both positively valenced (i.e., happy) and danceable songs will be more popular.

18.4 Step 1. Generate your hypotheses

In regression you hypothesize about coefficients, typically referred to as

and

We will use

We use

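While these are our main hypotheses, we should also try to conceptualize our study’s model. Our model can be represented as follows:

$$\text{Popularity}_i = \beta_0 + \beta_1\,\text{Valence}_i + \beta_2\,\text{Danceability}_i + \varepsilon_i$$

Where $\beta_0$ is the intercept, $\beta_1$ and $\beta_2$ are the regression coefficients for Valence and Danceability, respectively, and $\varepsilon_i$ is the error (residual) for song $i$.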

18.5 Step 2. Designing a study

While Taylor has given you a $3,000,000 budget, you decide to put that money in your RRSP, cheap out, and collect publicly available data from Spotify. You decide that you will collect a random sample of songs from Spotify and use a computer to estimate the valence and danceability of the songs. These are both measured as continuous variables. You decide to use a multiple regression to determine the effects of both variables on a song’s popularity (number of plays on Spotify in 2023, in millions). All variables are continuous (although regression can handle most variable types; ANOVA is just a special case of regression).

You do a power analysis and determine you need a sample of approximately 50 songs. Prior to conducting your research, you submit your research plan to the Grenfell Campus research ethics board, which approves your study and classifies it as low-risk.

18.6 Step 3. Conducting your study

You follow through with your research plan and get the data shown in the table at the beginning of this chapter (one row per song).

18.7 Step 4. Analyzing your data.

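Matrix algebra can be used to ‘solve’ our regression equation. However, we will not use matrix algebra to solve for our regression coefficients in this class. For those interested, we could use the following (see here for more information):

$$\mathbf{b} = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\mathbf{Y}$$

Where $\mathbf{b}$ is the vector of estimated regression coefficients, $\mathbf{X}$ is the matrix of predictor scores (with a first column of 1s for the intercept), and $\mathbf{Y}$ is the vector of outcome scores.

Here, $\mathbf{X}$ is (one row per song; the columns are the intercept, Valence, and Danceability):

$$\mathbf{X} = \begin{bmatrix} 1 & 13 & 2 \\ 1 & 17 & 7 \\ 1 & 23 & 4 \\ \vdots & \vdots & \vdots \\ 1 & 23 & 6 \end{bmatrix}$$

And $\mathbf{Y}$ is (each song’s Popularity):

$$\mathbf{Y} = \begin{bmatrix} 208 \\ 95 \\ 200 \\ \vdots \\ 119 \end{bmatrix}$$

The results would work out to:

$$\mathbf{b} = \begin{bmatrix} 237.42 \\ 1.09 \\ -23.79 \end{bmatrix}$$

Where each row is, in order, the intercept, the Valence coefficient, and the Danceability coefficient.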
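For readers who want to try it, here is a minimal base-R sketch of the matrix solution $\mathbf{b} = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\mathbf{Y}$, assuming the 50-song `taylor` data frame from the start of the chapter. It uses `solve(A, B)`, which solves the system $A\mathbf{b} = B$ directly instead of forming the inverse explicitly (numerically safer, same answer), and then compares the result with `lm()`.

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)

# X: a column of 1s for the intercept, then the two predictors
X <- cbind(Intercept = 1, Valence = taylor$Valence, Danceability = taylor$Danceability)
# Y: the outcome as a column vector
Y <- matrix(taylor$Popularity, ncol = 1)

# b = (X'X)^(-1) X'Y, computed by solving (X'X) b = X'Y
b <- solve(crossprod(X), crossprod(X, Y))
round(b, 2)

# Same estimates from lm()
round(coef(lm(Popularity ~ Valence + Danceability, data = taylor)), 2)
```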

When we had one predictor, we could visualize a line of best fit. With two predictors, we can visualize a ‘plane’ of best fit. For example, our data is represented as (you should be able to rotate this figure!):

As we add more predictors, visualization becomes difficult: we struggle to interpret anything beyond three dimensions!

18.8 SST

Like simple regression, the sum of squares total (SST) represents the sum of the squared differences between the observed scores on the outcome/criterion and the mean of the outcome/criterion:

$$SST = \sum_{i=1}^{n} (Y_i - \bar{Y})^2$$

18.9 SSE

Like simple regression, the sum of squares error/residual (SSE) represents the sum of the squared differences between the observed scores on the outcome/criterion and the predicted values of the outcome/criterion:

$$SSE = \sum_{i=1}^{n} (Y_i - \hat{Y}_i)^2$$

18.10 SSR

Like simple regression, the sum of squares regression/model (SSR) represents the sum of the squared differences between the predicted values of the outcome/criterion and the mean of the outcome/criterion:

$$SSR = \sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2$$

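Although the main R output (from `summary()`) does not provide the sums of squares for our model, knowing the above allows you to calculate them manually. For us, SSE = 33118.68, SSR = 74669.74, and SST = 107788.42 (note that SSR + SSE = SST).

Given these, we can calculate the MSR (mean square of the regression; with $k = 2$ degrees of freedom, one per predictor):

$$MSR = \frac{SSR}{k} = \frac{74669.74}{2} = 37334.87$$

and the MSE (mean square error; with $n - k - 1 = 50 - 2 - 1 = 47$ degrees of freedom):

$$MSE = \frac{SSE}{n - k - 1} = \frac{33118.68}{47} = 704.65$$

and, finally, the F statistic:

$$F = \frac{MSR}{MSE} = \frac{37334.87}{704.65} = 52.98$$

You can look up the associated p-value in any standard critical F table, or R can calculate it for us using `pf(q = 52.98, df1 = 2, df2 = 47, lower.tail = FALSE)`, which returns the probability of an F this large or larger given our degrees of freedom. (Without `lower.tail = FALSE`, `pf()` returns the cumulative probability, which is 1 minus the p-value.)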
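The whole chain, from sums of squares to the $p$-value, can be reproduced from the model’s fitted values. Below is a minimal base-R sketch, assuming the 50-song `taylor` data frame from the start of the chapter. Note the `lower.tail = FALSE` argument, which makes `pf()` return the upper-tail probability (the $p$-value) rather than the cumulative probability.

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)
taylors_model <- lm(Popularity ~ Valence + Danceability, data = taylor)

y <- taylor$Popularity
y_hat <- fitted(taylors_model)
n <- nrow(taylor)  # 50 songs
k <- 2             # number of predictors

sst <- sum((y - mean(y))^2)      # observed vs. mean
sse <- sum((y - y_hat)^2)        # observed vs. predicted
ssr <- sum((y_hat - mean(y))^2)  # predicted vs. mean

msr <- ssr / k            # df = k
mse <- sse / (n - k - 1)  # df = n - k - 1
f_stat <- msr / mse

# Upper-tail probability of F: this is the p-value
p_value <- pf(f_stat, df1 = k, df2 = n - k - 1, lower.tail = FALSE)

r2 <- ssr / sst  # effect size: proportion of variance explained

round(c(SST = sst, SSE = sse, SSR = ssr, F = f_stat, R2 = r2), 2)
p_value
```

The printed sums of squares should match those reported above, and `r2` should equal the `Multiple R-squared` line of `summary(taylors_model)`.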

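18.10.1 $R^2$

Like simple regression, we can calculate an effect size ($R^2$), the proportion of variance in the outcome explained by the model:

$$R^2 = \frac{SSR}{SST} = \frac{74669.74}{107788.42} = .693$$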

18.10.2 Formal Analysis

There are multiple ways we can run a regression in R. We will use the basic lm() function that we used in the simple regression chapter.

We fit the model with `taylors_model <- lm(Popularity ~ Valence + Danceability, data = taylor)`. The summary of that model is:

| Observations | 50 |
|---|---|
| Dependent variable | Popularity |
| Type | OLS linear regression |
| F(2,47) | 52.98 |
| R² | 0.69 |
| Adj. R² | 0.68 |

| | Est. | S.E. | t val. | p |
|---|---|---|---|---|
| (Intercept) | 237.42 | 15.61 | 15.21 | 0.00 |
| Valence | 1.09 | 0.70 | 1.56 | 0.13 |
| Danceability | -23.79 | 2.32 | -10.26 | 0.00 |

Standard errors: OLS
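The `lm()` call above assumes a data frame named `taylor` already exists. Below is a self-contained base-R sketch that builds it from the 50 songs at the start of the chapter, prints the usual summary and 95% confidence intervals for the $b$ weights, and obtains standardized (beta) weights by refitting the model after converting every variable to z-scores with `scale()`. The layout printed by `summary()` differs from the table above, but the estimates should agree.

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)

taylors_model <- lm(Popularity ~ Valence + Danceability, data = taylor)

# Unstandardized b weights, SEs, t values, p values, R^2, and the overall F test
summary(taylors_model)

# 95% confidence intervals for the b weights
confint(taylors_model, level = 0.95)

# Standardized (beta) weights: refit on z-scored copies of all three variables
z_scores <- as.data.frame(scale(taylor))
beta_model <- lm(Popularity ~ Valence + Danceability, data = z_scores)
round(coef(beta_model), 2)
```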

Also recall that the apaTables package (via its `apa.reg.table()` function) provides some additional information that is useful for our interpretation.

Regression results using Popularity as the criterion

| Predictor | b | b_95%_CI | beta | beta_95%_CI | sr2 | sr2_95%_CI | r | Fit |
|---|---|---|---|---|---|---|---|---|
| (Intercept) | 237.42** | [206.02, 268.83] | | | | | | |
| Valence | 1.09 | [-0.32, 2.50] | 0.13 | [-0.04, 0.29] | .02 | [-.02, .05] | .06 | |
| Danceability | -23.79** | [-28.45, -19.12] | -0.83 | [-1.00, -0.67] | .69 | [.54, .83] | -.82** | |
| | | | | | | | | R2 = .693** |
| | | | | | | | | 95% CI [.52, .77] |

Note. A significant b-weight indicates the beta-weight and semi-partial correlation are also significant.

b represents unstandardized regression weights. beta indicates the standardized regression weights.

sr2 represents the semi-partial correlation squared. r represents the zero-order correlation.

Square brackets are used to enclose the lower and upper limits of a confidence interval.

* indicates p < .05. ** indicates p < .01.

So how might we interpret this in the context of our original hypotheses? First, consider

Second, consider the hypotheses regarding the unique predictive ability of each individual predictor, which concerns each’s

This latter point is important for interpreting regression models. Each predictor’s coefficient describes its relationship with the outcome while holding all other predictors in the model constant. If I added a new predictor, the whole model would likely change, including the Danceability coefficient. Likewise, if I removed the Valence predictor from the model, even though it was not statistically significant, I would expect the Danceability regression coefficient to change.
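A quick way to see this is to fit Danceability alone and compare its coefficient with the one from the two-predictor model. Below is a minimal base-R sketch, assuming the 50-song `taylor` data frame from the start of the chapter; `dance_only` is just an illustrative name for the reduced model.

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)

full_model <- lm(Popularity ~ Valence + Danceability, data = taylor)
dance_only <- lm(Popularity ~ Danceability, data = taylor)

# Danceability's slope with Valence held constant vs. with Valence dropped
round(c(with_valence = coef(full_model)[["Danceability"]],
        without_valence = coef(dance_only)[["Danceability"]]), 2)

# How strongly the two predictors are related to each other
cor(taylor$Valence, taylor$Danceability)
```

In this sample the change is modest (from about -23.79 to about -23.51) because Valence and Danceability are only weakly correlated; with more strongly related predictors the shift can be much larger.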

18.10.3 Measures of Fit

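18.11 $R^2$

Our effect size is similar to simple regression and represents the proportion of variance the model explains in the outcome. It represents the total contribution of all predictors and is the square of the multiple correlation, $R$ (the correlation between the observed and predicted values of the outcome).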

As discussed,

18.12 Adjusted $R^2$

While

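The formula for Adjusted $R^2$ is:

$$R^2_{adj} = 1 - \frac{(1 - R^2)(n - 1)}{n - k - 1}$$

where $n$ is the sample size and $k$ is the number of predictors. For our model, $R^2_{adj} = 1 - \frac{(1 - .693)(50 - 1)}{50 - 2 - 1} = .68$.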

Unlike
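Adjusted $R^2$ can be computed by hand from $R^2$, the sample size $n$, and the number of predictors $k$, using $1 - (1 - R^2)(n - 1)/(n - k - 1)$. Below is a minimal base-R sketch, assuming the 50-song `taylor` data frame from the start of the chapter, that checks the hand calculation against the value stored by `summary()`.

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)
taylors_model <- lm(Popularity ~ Valence + Danceability, data = taylor)

n <- nrow(taylor)  # sample size
k <- 2             # number of predictors
r2 <- summary(taylors_model)$r.squared

# Adjusted R^2 = 1 - (1 - R^2)(n - 1) / (n - k - 1)
adj_r2 <- 1 - (1 - r2) * (n - 1) / (n - k - 1)

round(c(R2 = r2, adj_R2 = adj_r2,
        adj_R2_summary = summary(taylors_model)$adj.r.squared), 3)
```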

18.13 AIC

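The Akaike Information Criterion (AIC) is a fit statistic we can use for regression models (and many other kinds of models). It is calculated as $AIC = 2p - 2\ln(\hat{L})$, where $p$ is the number of estimated parameters and $\hat{L}$ is the model’s maximized likelihood. The major benefit of AIC is that it penalizes models for every additional parameter, so adding a predictor must improve fit enough to justify its cost.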

For our above model:

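Is this good? Bad? Medium? Hard to say on its own: AIC has no absolute scale, so it is only meaningful when comparing models fit to the same data. Among such models, the smaller the number, the better.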
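Because AIC only makes sense comparatively, a more useful exercise is to compare two candidate models fit to the same data. Below is a minimal base-R sketch, assuming the 50-song `taylor` data frame from the start of the chapter, that compares the full model with a Danceability-only model using `AIC()` and recomputes the full model’s value by hand. For `lm()` fits, R counts the residual variance as an estimated parameter, so the two-predictor model has $p = 4$ (intercept, two slopes, and the variance).

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)

full_model <- lm(Popularity ~ Valence + Danceability, data = taylor)
dance_only <- lm(Popularity ~ Danceability, data = taylor)

# Smaller AIC = better balance of fit and complexity (only comparable on the same data)
c(full = AIC(full_model), dance_only = AIC(dance_only))

# Full model by hand: AIC = -2 log-likelihood + 2p, with p = 4 here
# (intercept, two slopes, and the residual variance)
n <- nrow(taylor)
sse <- sum(resid(full_model)^2)
aic_by_hand <- n * log(2 * pi) + n * log(sse / n) + n + 2 * 4
aic_by_hand
```

In this sample the two AIC values differ by less than one point, so by this criterion the two models are nearly indistinguishable.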

18.13.1 Assumptions

The assumptions for multiple regression are similar to those in simple regression, with one key addition: multicollinearity.

18.13.1.1 Multicollinearity

Multicollinearity occurs when two or more predictors in the model are highly correlated, making it difficult to determine their unique contribution to the outcome. This can inflate standard errors, leading to unstable estimates and misleading significance tests.

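A common way to check for multicollinearity is by calculating the Variance Inflation Factor (VIF) for each predictor, $VIF_j = \frac{1}{1 - R^2_j}$, where $R^2_j$ is the $R^2$ obtained by regressing predictor $j$ on all of the other predictors. Most statistical software will report VIFs, such as this: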

| Observations | 50 |
|---|---|
| Dependent variable | Popularity |
| Type | OLS linear regression |
| F(2,47) | 52.98 |
| R² | 0.69 |
| Adj. R² | 0.68 |

| | Est. | S.E. | t val. | p | VIF |
|---|---|---|---|---|---|
| (Intercept) | 237.42 | 15.61 | 15.21 | 0.00 | NA |
| Valence | 1.09 | 0.70 | 1.56 | 0.13 | 1.01 |
| Danceability | -23.79 | 2.32 | -10.26 | 0.00 | 1.01 |

Standard errors: OLS

A VIF > 10 suggests severe multicollinearity, though some researchers use a lower threshold (e.g., VIF > 5). If multicollinearity is detected, possible solutions include removing redundant predictors, combining highly correlated variables, or using ridge regression to stabilize estimates.
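The VIF for each predictor is $1/(1 - R^2_j)$, where $R^2_j$ comes from regressing that predictor on all the other predictors. Below is a minimal base-R sketch, assuming the 50-song `taylor` data frame from the start of the chapter, that reproduces the VIFs in the table above by running those auxiliary regressions directly (packages such as car provide a `vif()` function that does this for you).

```r
# Spotify sample: the 50 songs from the table at the start of this chapter
taylor <- data.frame(
  Popularity = c(208, 95, 200, 62, 189, 87, 140, 144, 129, 141,
                 191, 175, 126, 112, 36, 76, 185, 162, 144, 144,
                 133, 107, 142, 109, 247, 133, 149, 190, 140, 186,
                 164, 135, 113, 129, 53, 133, 65, 111, 18, 166,
                 198, 87, 113, 106, 100, 116, 111, 101, 57, 119),
  Valence = c(13, 17, 23, 9, 22, 19, 1, 14, 6, 8,
              13, 19, 13, 18, 16, 17, 22, 17, 7, 12,
              13, 13, 7, 12, 25, 14, 11, 15, 8, 10,
              21, 22, 12, 10, 20, 20, 21, 16, 23, 17,
              13, 13, 11, 15, 11, 11, 4, 14, 13, 23),
  Danceability = c(2, 7, 4, 9, 4, 7, 5, 5, 7, 5,
                   3, 5, 4, 5, 8, 6, 4, 5, 4, 5,
                   5, 6, 2, 7, 4, 5, 4, 3, 4, 3,
                   4, 6, 5, 4, 7, 5, 7, 5, 8, 4,
                   2, 6, 5, 5, 8, 6, 6, 6, 8, 6)
)

# R^2 from regressing each predictor on the other predictor(s)
r2_valence <- summary(lm(Valence ~ Danceability, data = taylor))$r.squared
r2_dance <- summary(lm(Danceability ~ Valence, data = taylor))$r.squared

# VIF_j = 1 / (1 - R^2_j)
vif_manual <- c(Valence = 1 / (1 - r2_valence),
                Danceability = 1 / (1 - r2_dance))
round(vif_manual, 2)
```

With only two predictors, both auxiliary regressions have the same $R^2$ (the squared correlation between Valence and Danceability), so the two VIFs are identical.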

18.14 Step 5. Writing up your results

We conducted a multiple regression analysis to examine the association between Valence, Danceability, and Popularity. The overall model was statistically significant, suggesting that Valence and Danceability explain a substantial proportion of the variance in Popularity, $F(2, 47) = 52.98$, $p < .001$, $R^2 = .69$, 95% CI [.52, .77].

Examining individual predictors, the effect of Valence on Popularity was positive but not statistically significant, $b = 1.09$, 95% CI [-0.32, 2.50], $t(47) = 1.56$, $p = .13$, $sr^2 = .02$.

Conversely, Danceability had a statistically significant negative effect on Popularity, $b = -23.79$, 95% CI [-28.45, -19.12], $t(47) = -10.26$, $p < .001$, $sr^2 = .69$.

These results suggest that Danceability is a strong negative predictor of Popularity, while Valence does not significantly contribute to the prediction of Popularity when controlling for Danceability.