| BagID | Flavour | Weight |

|---|---|---|

| 1 | Ketchup | 203.46 |

| 2 | Ketchup | 202.53 |

| 3 | Ketchup | 189.37 |

| 4 | Ketchup | 190.32 |

| 5 | Ketchup | 203.45 |

| 6 | Ketchup | 199.86 |

| 7 | Ketchup | 200.60 |

| 8 | Ketchup | 197.85 |

| 9 | Ketchup | 191.77 |

| 10 | Ketchup | 186.04 |

| 11 | Ketchup | 193.80 |

| 12 | Ketchup | 194.04 |

| 13 | Ketchup | 194.23 |

| 14 | Ketchup | 189.66 |

| 15 | Ketchup | 198.12 |

| 16 | Regular | 206.96 |

| 17 | Regular | 201.44 |

| 18 | Regular | 209.53 |

| 19 | Regular | 195.01 |

| 20 | Regular | 204.16 |

| 21 | Regular | 206.17 |

| 22 | Regular | 198.25 |

| 23 | Regular | 200.89 |

| 24 | Regular | 197.88 |

| 25 | Regular | 198.52 |

| 26 | Regular | 191.28 |

| 27 | Regular | 205.95 |

| 28 | Regular | 191.04 |

| 29 | Regular | 201.97 |

| 30 | Regular | 213.08 |

9 Independent Sample t-test

This chapter will cover the independent t-test. Although more details follow, in short, an independent t-test is a statistical method used to determine if there is a significant difference between the means of two independent groups. Unlike the z-test, the independent t-test is used when the population variances are unknown and typically estimated from the sample data. It calculates a t-statistic, which measures how many standard deviations the difference between the two sample means is from the expected difference (usually zero). By comparing this t-statistic to a critical value from the t-distribution, researchers can determine whether the observed difference is statistically significant. Independent t-tests are commonly used in studies with smaller sample sizes or when population parameters are not available.

9.1 Some Additional Details

The t-test is used to compare two groups, which is a categorical (independent) variable (e.g., male versus female; time 1 versus time 2; treatment versus control) on some continuous outcome (dependent) variable.

NEED TO ADD CHAPTER ON RESEARCH DESIGN OF BUILD THESE CONCEPTS INTO SCIENTIFIC PROCESS. In most experiments, we aim to both randomly sample participants from the population and randomly assign them to different groups. This process helps ensure that the groups are comparable, so any differences in the outcome can be attributed to the grouping variable. In other words, any changes in the dependent variable (DV) are assumed to result from the independent variable (IV).

If you recall, the null hypothesis typically purports that there is no difference/association. Thus, imagine we are comparing the means two groups: 1 and 2. The null hypothesis states:

and the alternative hypothesis states (for a two-sided test)

In the last chapter we learned about the z-test, which carries a somewhat unrealistic assumptions: that we know some population variance parameter. Most times we do not know the population standard deviation or variance, so we must estimate it using our sample data. Furthermore, in last chapter we simulated the distribution of a sample’s means over and over to demonstrate central limit theorem. In a t-test, we are no longer interested in one mean, but two. We also have two potential standard deviations. Thus, our analysis is slightly different.

9.2 Betcha’ can’t eat just two…?

Imagine we wanted to model the average weight of bags of two different flavours of Lay’s Potato Chips. Let’s use our scientific method, as discussed in an earlier chapter, to conduct some science.

Specifically, you have a new theory: Lays purposely puts fewer chips in a bag of Ketchup Chips than Regular Chips because the seasoning in Ketchup Chips costs more to produce. Based on this, you hypothesize that 200g bags of Ketchup Chips weigh less than 200g bags of Regular Chips.

9.3 Step 1. Generate your hypotheses

Your hypothesis can be translated into a statistical hypothesis, represented as the null and alternative hypotheses:

You email Lays, and they respond similarly to before: “All our bags weigh, on average, 200g regardless of flavor! Also, we don’t know the standard deviations of ALL the flavours…measure them yourself! And, oh…stop emailing us!”

9.4 Step 2. Designing a study

Determined to investigate further, you plan to purchase two cases of chips (15 bags each) directly from Lays: one case of Ketchup Chips and one case of Regular Chips. Your total sample size is 30 bags–15 Ketchup, 15 Regular.

Once you have the chips, you will weigh each bag using a professionally calibrated scale, ensuring the weights reflect only the chips themselves, excluding the bags. You decide to use null hypothesis significance testing (NHST) to analyze your data with a significance level of

Prior to conducting your research, you submit your research plan to the Grenfell Campus research ethics board, which approves your study and classified it as low-risk.

9.5 Step 3. Conducting your study

You follow through with your research plan. You get the following data:

And we can summarize the data:

| Flavour | Mean | Min | Max | SD |

|---|---|---|---|---|

| Ketchup | 195.673 | 186.04 | 203.46 | 5.615633 |

| Regular | 201.475 | 191.04 | 213.08 | 6.359418 |

It is also helpful to visualize the data using a graph/plot. There are several options for a t-test (see an earlier chapter regarding ways to visualize data). For now, we will create a dot plot that has a dot for each bag of chips. The y-axis represent the weight, and the x-axis represent the flavour. I have added some slight x-axis movement within each flavour to prevent dots from overlapping.

9.6 Step 4. Analyzing Data

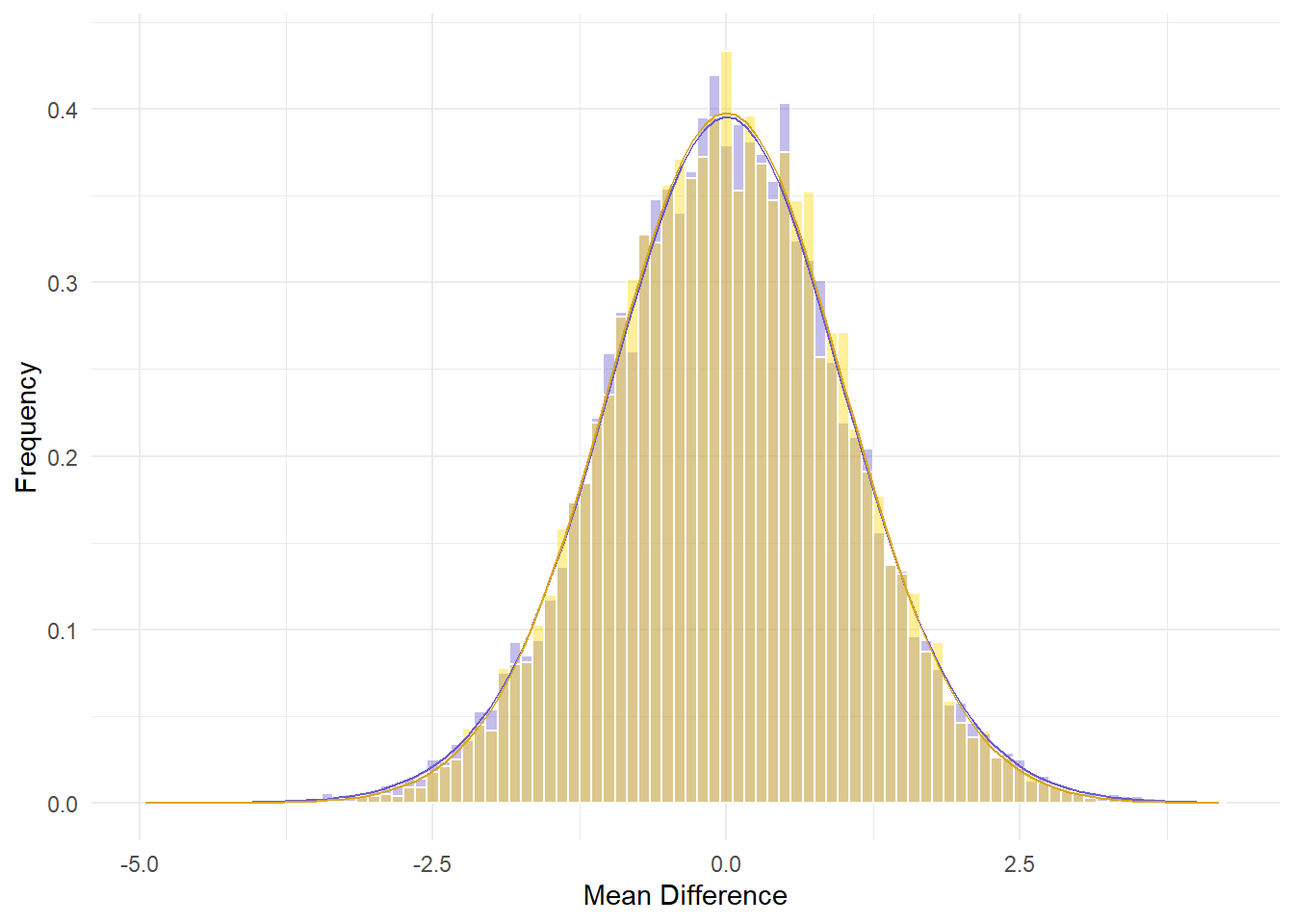

If you recall the concept of the distribution of sample means from the z-test chapter, you know that sample means vary due to random sampling. For example, even if a population mean is 200g with a standard deviation of 6g, taking a sample from that population might not give us exactly 200g every time due to this variability.

We can apply this same logic to the differences between two groups’ means. Even if the true means of both groups are identical, sampling variability will cause the observed difference between the sample means to deviate from 0. In other words, we may see some differences between the two groups’ sample means, even if both are drawn from the same population or populations with identical means.

A t-test helps us assess whether the observed difference between these sample means is large enough to be statistically significant. By using a pre-specified significance level (like

We will need several pieces of information prior to analyzing our results.

9.7 Deriving the Distribution of Differences in Sample Means

The distribution of differences in sample means, known as the sampling distribution of the difference between two means, is derived from the individual sampling distributions of each group’s mean.

9.7.1 1. Sampling Distribution of Each Group’s Mean

For each group in an independent t-test, the sampling distribution of the sample mean is normally distributed (or approximately normal for large enough sample sizes, due to the Central Limit Theorem). The mean of each group’s sampling distribution is the population mean (

- For Group 1, the standard error of the mean (SE1) is:

where

- For Group 2, the standard error of the mean (SE2) is:

9.7.2 2. Sampling Distribution of the Difference Between Means

To obtain the sampling distribution of the difference between the two sample means (

If the null hypothesis (

9.7.3 3. Standard Error of the Difference Between Means

The variability (spread) of the distribution of the difference between means is captured by the standard error of the difference. This is calculated by combining the standard errors of both groups. If we assume that the variances of the two groups are equal, we use the pooled standard deviation to compute the standard error of the difference:

Where

The pooled standard deviation (

or, an alternative formula for pooled variance follows. You can take the square root of the following to obtain the former. It is often called the weighted variance form:

9.7.4 4. t-Distribution

The sampling distribution of the difference between means follows a t-distribution when the population variances are unknown. The degrees of freedom (df) for this t-distribution are:

This t-distribution is used because we are estimating the population variances from the sample data, and it accounts for the uncertainty associated with that estimation.

9.7.5 5. Calculating the t-statistic

The t-statistic is calculated by comparing the observed difference between the two sample means to the expected difference under the null hypothesis. The formula is:

Where: -

The t-statistic tells us how many standard errors the observed difference between means is away from 0 (the expected difference under the null hypothesis). A large t-value suggests that the difference between the means is unlikely to have occurred by chance, leading to the potential rejection of the null hypothesis.

9.7.5.1 Assumptions

There are several assumptions we must have when testing from the t distribution.

- First, the data are continuous. For our purposes, this will be interval or ratio data.

- Second, the data are randomly sampled.

- And third, the variance of each group is similar.

9.7.6 Welch’s t-test

So far we have used formulas from Student’s t-test. Specifically, Welch’s t-test is an alternative test that is more robust to unequal group variances and smaller sample sizes. Welch’s alters the denominator for the t-test in the equation to:

Thus, the overall equation for Welch’s t-test is:

Furthermore, Welch’s t-test alters the degrees of freedom (v) to:

Importantly, there are no major disadvantages to using Welch’s versus Student’s and you should probably use it in your own research. R’s function t.test() automatically uses Welch’s t-test.

For the purposes of this course, we will use Student’s t-test for our hand calculations. However, you can use Welch’s t-test for any analyses conducted using statistical software.

9.7.7 Effect Size

Cohen’s d is the standard effect size estimate for a t-test. It provides us with an estimate of the standardized mean difference. It is:

This is a standardized effect size that be compared across groups of metrics. Please review the chapter that discussed how to best determine meaningful effect sizes. However, Cohen suggested the following cut-offs:

- Small -

- Medium -

- Large -

9.7.8 Ketchup a rip-off?

Let’s apply this to our chips example. We have all the data to calculate our t-statistic.

| Flavour | Mean | Min | Max | SD |

|---|---|---|---|---|

| Ketchup | 195.6733 | 186.04 | 203.46 | 5.615633 |

| Regular | 201.4753 | 191.04 | 213.08 | 6.359418 |

We calculate our squared differences between each bag and the mean of that group, which will be needed later. Not that in the following table, the last column is the squared difference between the weight of a bag of chips (

| BagID | Flavour | Weight | (x - x̅)^2 |

|---|---|---|---|

| 1 | Ketchup | 203.46 | 60.6321778 |

| 2 | Ketchup | 202.53 | 47.0138778 |

| 3 | Ketchup | 189.37 | 39.7320111 |

| 4 | Ketchup | 190.32 | 28.6581778 |

| 5 | Ketchup | 203.45 | 60.4765444 |

| 6 | Ketchup | 199.86 | 17.5281778 |

| 7 | Ketchup | 200.60 | 24.2720444 |

| 8 | Ketchup | 197.85 | 4.7378778 |

| 9 | Ketchup | 191.77 | 15.2360111 |

| 10 | Ketchup | 186.04 | 92.8011111 |

| 11 | Ketchup | 193.80 | 3.5093778 |

| 12 | Ketchup | 194.04 | 2.6677778 |

| 13 | Ketchup | 194.23 | 2.0832111 |

| 14 | Ketchup | 189.66 | 36.1601778 |

| 15 | Ketchup | 198.12 | 5.9861778 |

| 16 | Regular | 206.96 | 30.0815684 |

| 17 | Regular | 201.44 | 0.0012484 |

| 18 | Regular | 209.53 | 64.8776551 |

| 19 | Regular | 195.01 | 41.8005351 |

| 20 | Regular | 204.16 | 7.2074351 |

| 21 | Regular | 206.17 | 22.0398951 |

| 22 | Regular | 198.25 | 10.4027751 |

| 23 | Regular | 200.89 | 0.3426151 |

| 24 | Regular | 197.88 | 12.9264218 |

| 25 | Regular | 198.52 | 8.7339951 |

| 26 | Regular | 191.28 | 103.9448218 |

| 27 | Regular | 205.95 | 20.0226418 |

| 28 | Regular | 191.04 | 108.8961818 |

| 29 | Regular | 201.97 | 0.2446951 |

| 30 | Regular | 213.08 | 134.6682884 |

So, for bag 1:

Let’s fill in the missing data to compute our t-statistic. We have:

You may wish to look the p-value of the resulting test up in a critical value table. However, most likely you will use statistical software to provide you with an exact p-value. The following is the formal results of our t-test:

9.7.9 Cohen’s D

Recall that:

From our above means and pooled variance, we have:

9.8 Step 5: Write up your results/conclusions

A two Sample t-test testing the difference of weight of bags of chips by Flavour suggest that Ketchup chips (

9.9 Conclusion

an independent t-test is a statistical method used to determine if there is a significant difference between the means of two independent groups. Unlike the z-test, the independent t-test is used when the population variances are unknown and typically estimated from the sample data. It calculates a t-statistic, which measures how many standard deviations the difference between the two sample means is from the expected difference (usually zero). By comparing this t-statistic to a critical value from the t-distribution, researchers can determine whether the observed difference is statistically significant.